This article explores in detail how the Shoumao technology team combines large language models (LLMs) with AI Agent technology, covering the problems it encountered, its approach, and practical cases. It first introduces the concept of an AI Agent, defined as Agent = LLM + memory + planning skills + tool use, and emphasizes that an Agent must be able to perceive its environment, make decisions, and take appropriate actions. It then describes an Agent's decision-making process in three steps (perception, planning, and action) and illustrates the execution flow with concrete cases. In an LLM-driven Agent system, the LLM acts as the brain, supported by key components for planning, memory, and tool use.
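The formula Agent = LLM + memory + planning + tool use, together with the perceive-plan-act loop, can be sketched in a few lines of Python. This is a minimal illustration, not the Shoumao implementation: the `Agent` and `Decision` classes, the `fake_llm` stub standing in for a real model call, and the `search_product` tool are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

# Hypothetical sketch: a tool is any callable that takes a string and returns a string.
Tool = Callable[[str], str]


@dataclass
class Decision:
    """The next step chosen by the model: call a tool, or answer directly."""
    tool: Optional[str]  # None means "answer the user now"
    argument: str


@dataclass
class Agent:
    llm: Callable[[str, List[str]], Decision]  # the "brain" that plans each step
    tools: Dict[str, Tool]                      # tool use
    memory: List[str] = field(default_factory=list)  # short-term memory

    def run(self, user_input: str, max_steps: int = 5) -> str:
        # Perception: record what the environment (here, the user) said.
        self.memory.append(f"user: {user_input}")
        for _ in range(max_steps):
            # Planning: ask the model for the next step given everything remembered.
            decision = self.llm(user_input, self.memory)
            if decision.tool is None:
                self.memory.append(f"answer: {decision.argument}")
                return decision.argument
            # Action: run the chosen tool and store the observation in memory.
            result = self.tools[decision.tool](decision.argument)
            self.memory.append(f"{decision.tool}: {result}")
        return "step limit reached"


# Hypothetical stand-in for a real LLM call: search first, then answer.
def fake_llm(query: str, memory: List[str]) -> Decision:
    observations = [m for m in memory if m.startswith("search_product: ")]
    if not observations:
        return Decision(tool="search_product", argument=query)
    return Decision(tool=None, argument=observations[-1].split(": ", 1)[1])


# Hypothetical tool backed by a toy in-memory catalog.
def search_product(keyword: str) -> str:
    catalog = {"running shoes": "Model X, 399 CNY"}
    return catalog.get(keyword, "no match")


agent = Agent(llm=fake_llm, tools={"search_product": search_product})
answer = agent.run("running shoes")
print(answer)  # Model X, 399 CNY
```

The `max_steps` bound is a simple safeguard so that a model which never decides to answer cannot loop forever.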

Over the past year, the Shoumao team has been following AI technology trends and exploring how to combine Agent technology with its shopping-guide business. The article gives a detailed account of the technical challenges, ideas, and practices involved in integrating Agent capabilities into Shoumao's intelligent assistant service. It presents the client-side display solution, Agent abstraction and management, and the construction of an Agent lab. It also discusses how tools are classified and defined, how tool exceptions are handled, what tool granularity means and the trade-offs of different granularities, and what to consider in keeping tools secure.
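The tool concerns listed above (classification, definition, exception handling, and security) can be illustrated with a small tool wrapper. This is a sketch under stated assumptions, not the article's design: the split into read-only `QUERY` and side-effecting `ACTION` tools, the `ToolSpec` and `invoke` names, the confirmation rule, and the error-dictionary format are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Any, Callable, Dict, Tuple


class ToolKind(Enum):
    # Hypothetical classification of tools.
    QUERY = "query"    # read-only, e.g. look up a price
    ACTION = "action"  # has side effects, e.g. add to cart


@dataclass(frozen=True)
class ToolSpec:
    name: str
    description: str                # shown to the LLM so it can choose the tool
    kind: ToolKind
    required_params: Tuple[str, ...]
    handler: Callable[..., Any]


def invoke(tool: ToolSpec, params: Dict[str, Any], user_confirmed: bool = False) -> Dict[str, Any]:
    # Security: side-effecting tools only run after explicit user confirmation.
    if tool.kind is ToolKind.ACTION and not user_confirmed:
        return {"ok": False, "error": "confirmation_required"}
    # Validate LLM-supplied arguments before executing anything.
    missing = [p for p in tool.required_params if p not in params]
    if missing:
        return {"ok": False, "error": f"missing_params: {', '.join(missing)}"}
    unexpected = sorted(set(params) - set(tool.required_params))
    if unexpected:
        return {"ok": False, "error": f"unexpected_params: {', '.join(unexpected)}"}
    try:
        return {"ok": True, "data": tool.handler(**params)}
    except Exception as exc:  # turn failures into a message the LLM can read
        return {"ok": False, "error": f"{type(exc).__name__}: {exc}"}


# Hypothetical example tools.
def get_price(sku: str) -> int:
    prices = {"A1": 399}
    return prices[sku]  # raises KeyError for unknown SKUs


def add_to_cart(sku: str) -> str:
    return f"added {sku}"


price_tool = ToolSpec("get_price", "Look up a product price by SKU", ToolKind.QUERY, ("sku",), get_price)
cart_tool = ToolSpec("add_to_cart", "Add a product to the cart", ToolKind.ACTION, ("sku",), add_to_cart)

print(invoke(price_tool, {"sku": "A1"}))  # {'ok': True, 'data': 399}
print(invoke(price_tool, {"sku": "Z9"}))  # {'ok': False, 'error': "KeyError: 'Z9'"}
print(invoke(cart_tool, {"sku": "A1"}))   # {'ok': False, 'error': 'confirmation_required'}
```

Returning errors as data rather than raising them lets the model see what went wrong and, for example, ask the user for a missing parameter instead of failing the whole turn.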

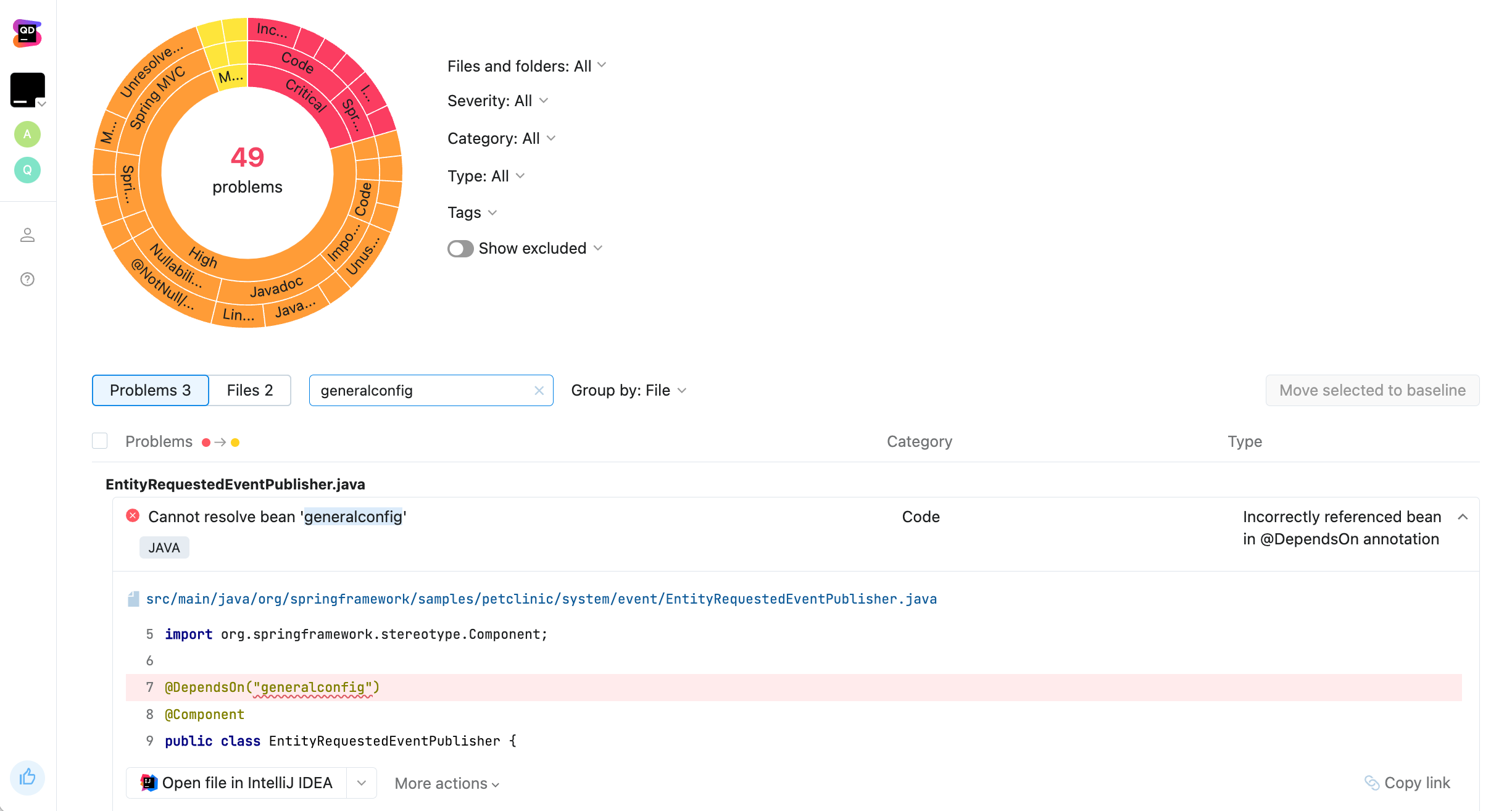

During the project's launch and subsequent iterations, the Shoumao team ran into several issues: the business demands highly accurate results; letting the LLM output directly to the client produces a high error rate in structured display; the Agent's understanding of tools is unstable; and the LLM is sensitive to how complex tool return values are.
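One common mitigation for the structured-display problem is to validate the model's output before the client renders it and fall back to plain text when validation fails. The sketch below assumes the model is asked to emit a JSON product card; the `CARD_SCHEMA` fields and the `to_render_payload` function are hypothetical and not taken from the article.

```python
import json
from typing import Any, Dict

# Hypothetical card schema: field name -> accepted Python type(s).
CARD_SCHEMA: Dict[str, Any] = {"title": str, "price": (int, float), "item_id": str}


def to_render_payload(llm_output: str) -> Dict[str, Any]:
    """Return a card payload if the LLM output is a valid card, else plain text."""
    fallback = {"type": "text", "content": llm_output}
    try:
        data = json.loads(llm_output)
    except json.JSONDecodeError:
        return fallback  # not JSON at all: show the raw text
    if not isinstance(data, dict):
        return fallback
    for name, expected in CARD_SCHEMA.items():
        value = data.get(name)
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected):
            return fallback
    # Forward only the schema fields, dropping anything extra the model added.
    return {"type": "card", "content": {name: data[name] for name in CARD_SCHEMA}}


good = '{"title": "Running shoes", "price": 399, "item_id": "A1", "note": "x"}'
print(to_render_payload(good))
print(to_render_payload('{"title": "Running shoes", "price": "cheap"}')["type"])  # text
print(to_render_payload("Sorry, I could not find that item.")["type"])  # text
```

Keeping the fallback on the server side means a malformed model response degrades to readable text instead of a broken card on the client.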
