👋 Dear friends, welcome to this issue of AI Field Highlights!

This week, we've carefully selected 30 insightful articles from the artificial intelligence domain, offering a panoramic view of the latest breakthroughs and trends to help you stay ahead of the curve and keep a finger on the pulse of AI development. The AI field was buzzing with activity. Model competition intensified, with major players like Google, Meta, and Kimi unveiling new offerings. Focus areas included Mixture-of-Experts (MoE) architectures, multimodal capabilities, and ultra-long context windows. Meanwhile, the AI Agent ecosystem is maturing rapidly, with infrastructure improving on several fronts: accessible teaching of foundational theory, development frameworks, cloud platform services (such as AutoRAG and full-cycle MCP hosting), and collaboration protocols (A2A). Advances in RAG, new development paradigms such as prompt engineering and Vibe Coding, emerging AI-native products (in audio/video and CRM), in-depth industry reports, and insights from industry leaders round out this week's AI landscape.

This Week's Highlights:

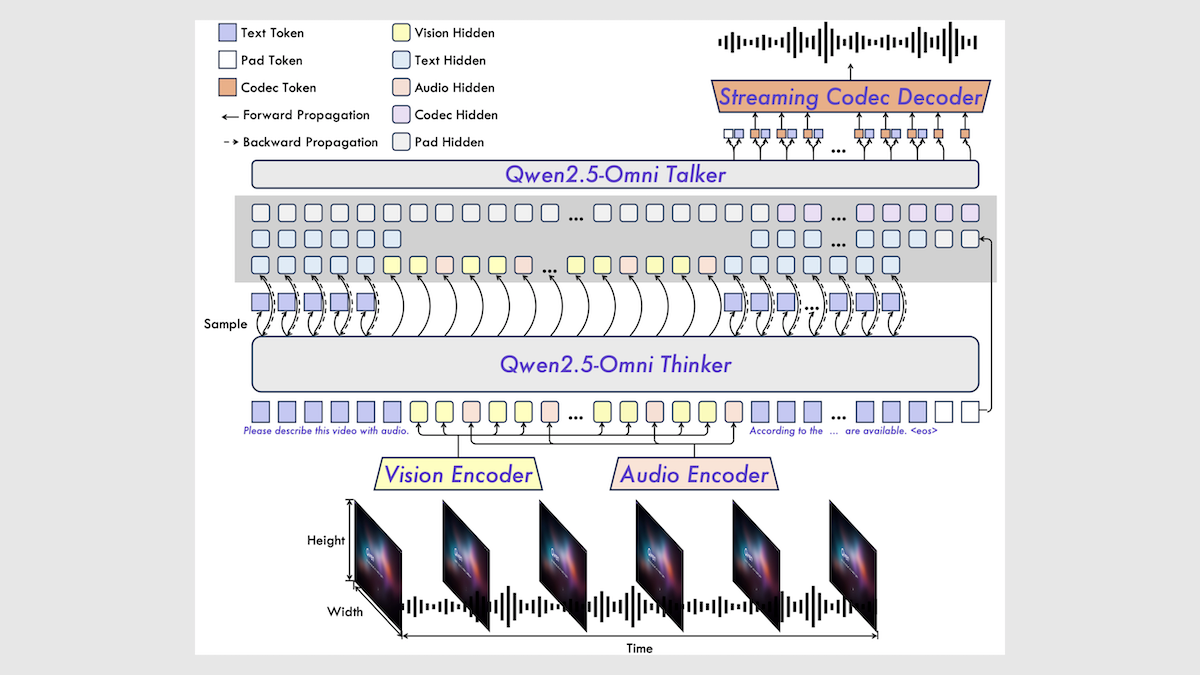

- Model Race Escalates, Focusing on Multimodality & Efficient Reasoning: Google released Gemini 2.5 Flash/Pro, the video model Veo 2, the image model Imagen 3, and the audio model Chirp 3. Meta open-sourced the Llama 4 series, which uses an MoE architecture and offers a context window of up to 10M tokens (in Llama 4 Scout). Kimi open-sourced Kimi-VL, a 16B-parameter vision-language model that also uses MoE and activates only 2.8B parameters during inference, combining high efficiency with strong reasoning.

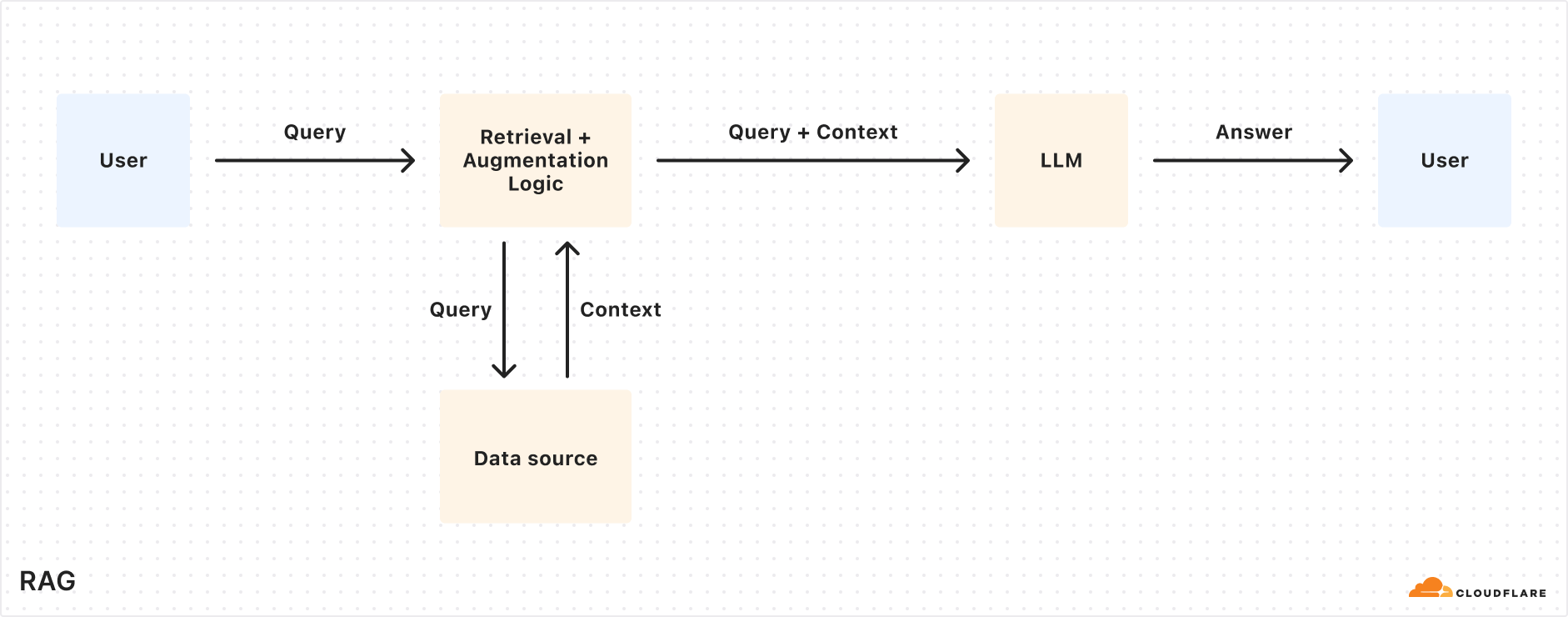

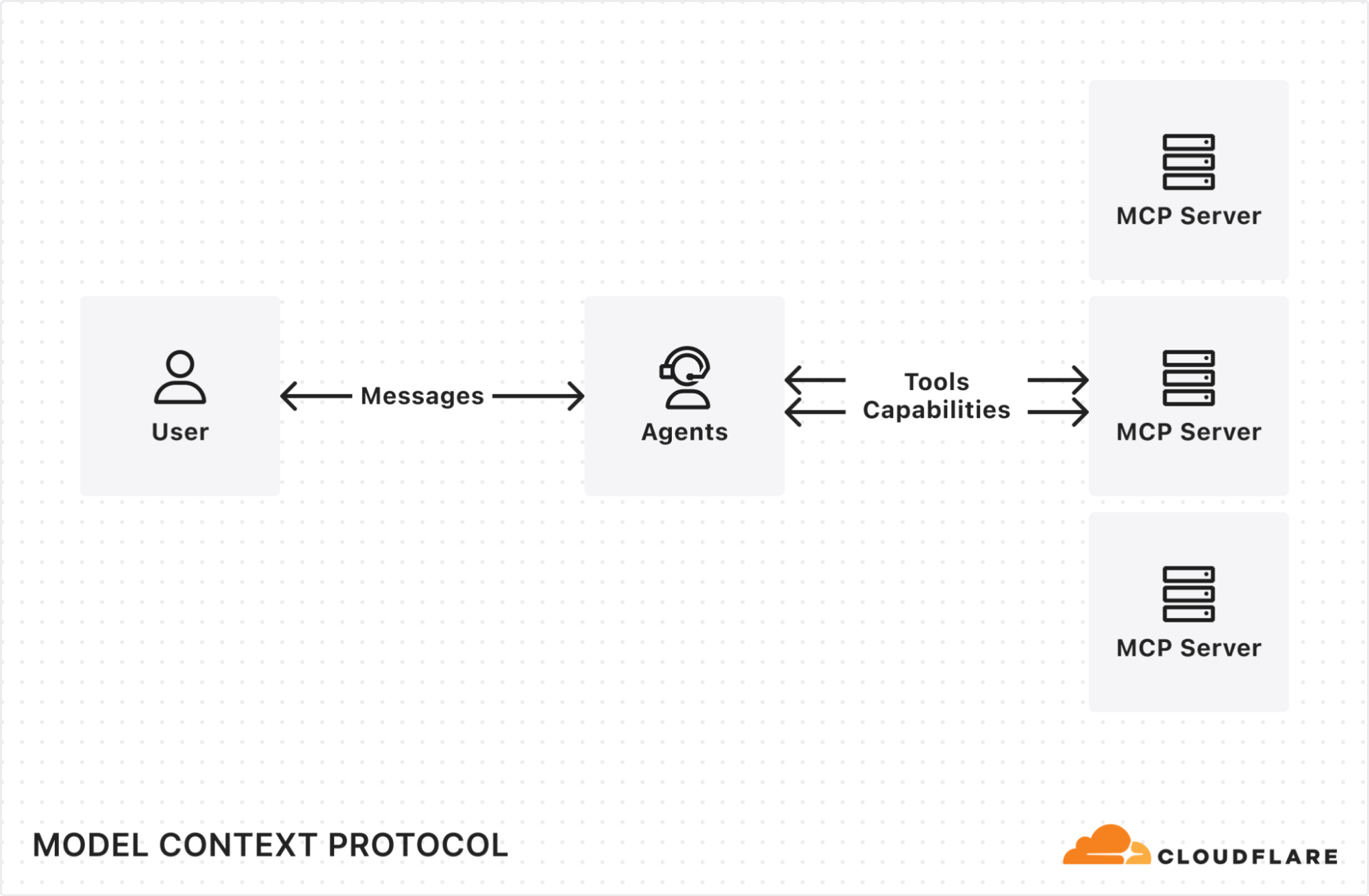

- AI Agent Ecosystem Accelerates as Infrastructure Matures: From accessible theory (Prof. Hung-yi Lee's new course) to practical frameworks, AI Agent development is advancing rapidly. Google launched the Agent Development Kit (ADK) and the Agent-to-Agent (A2A) collaboration protocol. Cloudflare introduced AutoRAG, a fully managed RAG service, and enhanced its Agent SDK with remote MCP support, authentication, and a free tier for Durable Objects. Alibaba Cloud's Bailian platform launched a full-cycle MCP service, offering one-stop hosting for AI tools.

- Deep Dive into Agents' Challenges, Opportunities & Future Forms: Commentators examined the drivers of the Agent boom (model reasoning, multimodality, coding ability) and its challenges (engineering implementation, model bottlenecks), and debated what kind of Agent will prevail, with simple, general designs favored over complex ones. Rabbit founder Jesse Lyu outlined his vision for RabbitOS Intern, an Agent-based OS that aims to disrupt traditional app-based interaction. The case for browsers purpose-built for AI Agents was also put forward and debated.

- RAG Evolves Toward Multimodality & Greater Intelligence: RAG continues to evolve as a key technique for enhancing large-model performance. Researchers laid out four core questions for RAG development (data value, heterogeneous retrieval, generation control, and evaluation systems) along with future directions such as multimodal retrieval and deep search. Jina AI released jina-reranker-m0, a next-generation multimodal, multilingual reranker that assesses relevance using both text and visual elements.

- Prompt Engineering & New Coding Paradigms Gain Attention: Google released an official Prompt Engineering whitepaper that systematically covers concepts, model configuration, techniques, and best practices. The emerging "Vibe coding" paradigm (writing code collaboratively with AI through natural language) gained traction, and Shopify's CEO went so far as to make AI proficiency a baseline job expectation that factors into performance reviews. Other articles shared Vibe coding tips and prompt examples, including a prompt collection for GPT-4o image generation.

- AI-Native Products Emerge, Reshaping Vertical Sectors: The AI-powered audio/video creation app Captions has grown rapidly on the strength of distinctive AI features (virtual avatars, smart editing, auto-captions), showing AI's potential in content creation. Day.ai, founded by a former HubSpot CPO, is building an AI-native CRM that tackles traditional CRM pain points by automatically extracting and analyzing data to boost sales efficiency.

- Continued Exploration of Model Reasoning & Evaluation: A survey systematically reviewed Test-Time Scaling (TTS), an effective way to boost model reasoning by spending more compute at inference time, and proposed a four-dimensional analysis framework. Midjourney released V7 Alpha; in-depth reviews found improvements in image quality and personalization but showed, through direct comparisons, that it still lags behind models like GPT-4o in prompt adherence and text rendering.

- Industry Reports Reveal the Macro Landscape & Trends: Stanford University's "AI Index Report 2025" offered a comprehensive analysis of AI progress, adoption, the global landscape (a narrowing US-China gap, open-source models catching up), ethical challenges, and socio-economic impact. A separate analysis of 2,443 US AI startups and 802 investors shed light on early-stage funding patterns, industry distribution, and investor preferences.

- Perspectives from Founders & Industry Leaders: OpenAI CEO Sam Altman acknowledged that being a "wrapper" is a valid starting point for early-stage AI startups and predicted that AI Agents will transform development workflows. Rabbit founder Jesse Lyu described his ambition to reshape operating systems around Agents. Shopify's CEO stressed that adopting AI is a necessity. Duozhuayu founder Mao Zhu shared practical lessons on applying AI in a C2B2C model, along with reflections on her entrepreneurial journey.

- Expanding AI's Application Boundaries & Hardware Considerations: Beyond software, AI is also reshaping thinking about hardware. A debate over the commercial viability of humanoid robots prompted a review of 10 leading companies, covering challenges in cost and application scenarios, whether the "humanoid" form factor is truly necessary, and the path from factory floors to homes.

🔍 In summary, this week showed parallel progress in foundational model innovation and in the AI Agent ecosystem. Rapid technical iteration is pushing AI deeper into application areas such as audio/video creation, CRM, and programming, while business-model experimentation grows increasingly active. At the same time, debates over technical roadmaps (e.g., MoE vs. other architectures, Agent design philosophies), strategy (how enterprises should embrace AI, how startups can survive), and broader socio-economic impact (as highlighted by the Stanford AI Index) continue to intensify. Click through the article links to dive deeper into this week's AI frontiers, and join us in reflecting on and embracing this wave of transformation.