Dear friends,

👋 Welcome to this edition of BestBlogs.dev's curated article selection!

🚀 This issue focuses on the latest developments in artificial intelligence, innovative applications, and business dynamics. Let's dive into the breakthrough progress in AI technology and explore the strategic moves of industry giants and innovative enterprises.

🔥 Breakthrough Progress in AI Models

We spotlight several important AI model updates:

Baidu released ERNIE 4.0 Turbo, with significant improvements in speed and output quality.

DeepSeek-Coder-V2 reportedly outperformed GPT-4 Turbo on coding benchmarks, showcasing the potential of open-source models.

Google introduced Gemma 2, an open-source large language model, offering developers a new alternative.

Anthropic launched Claude 3.5 Sonnet alongside the new Artifacts feature, which displays generated content such as code and documents in a dedicated window next to the conversation.

💡 AI Development Tools and Frameworks

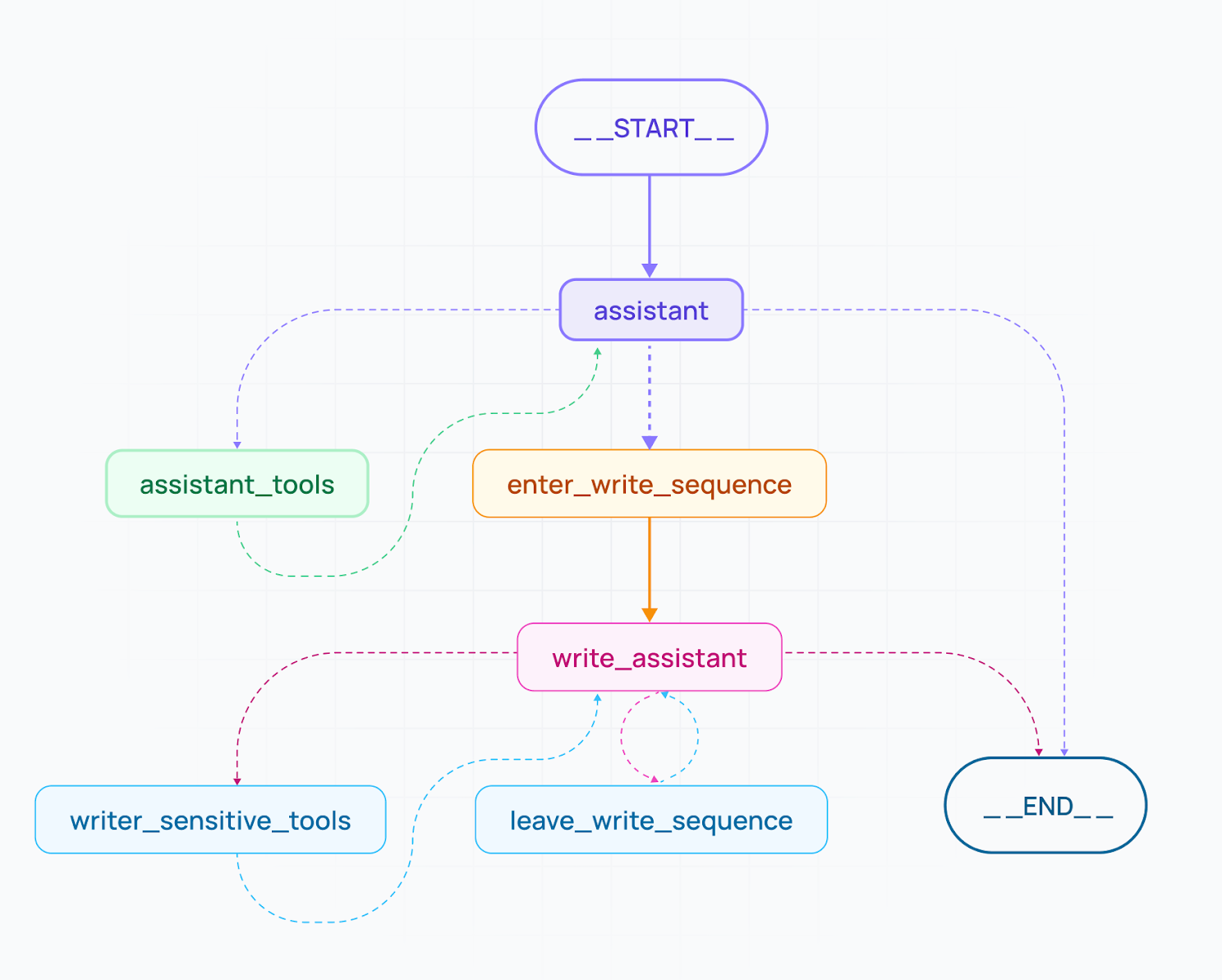

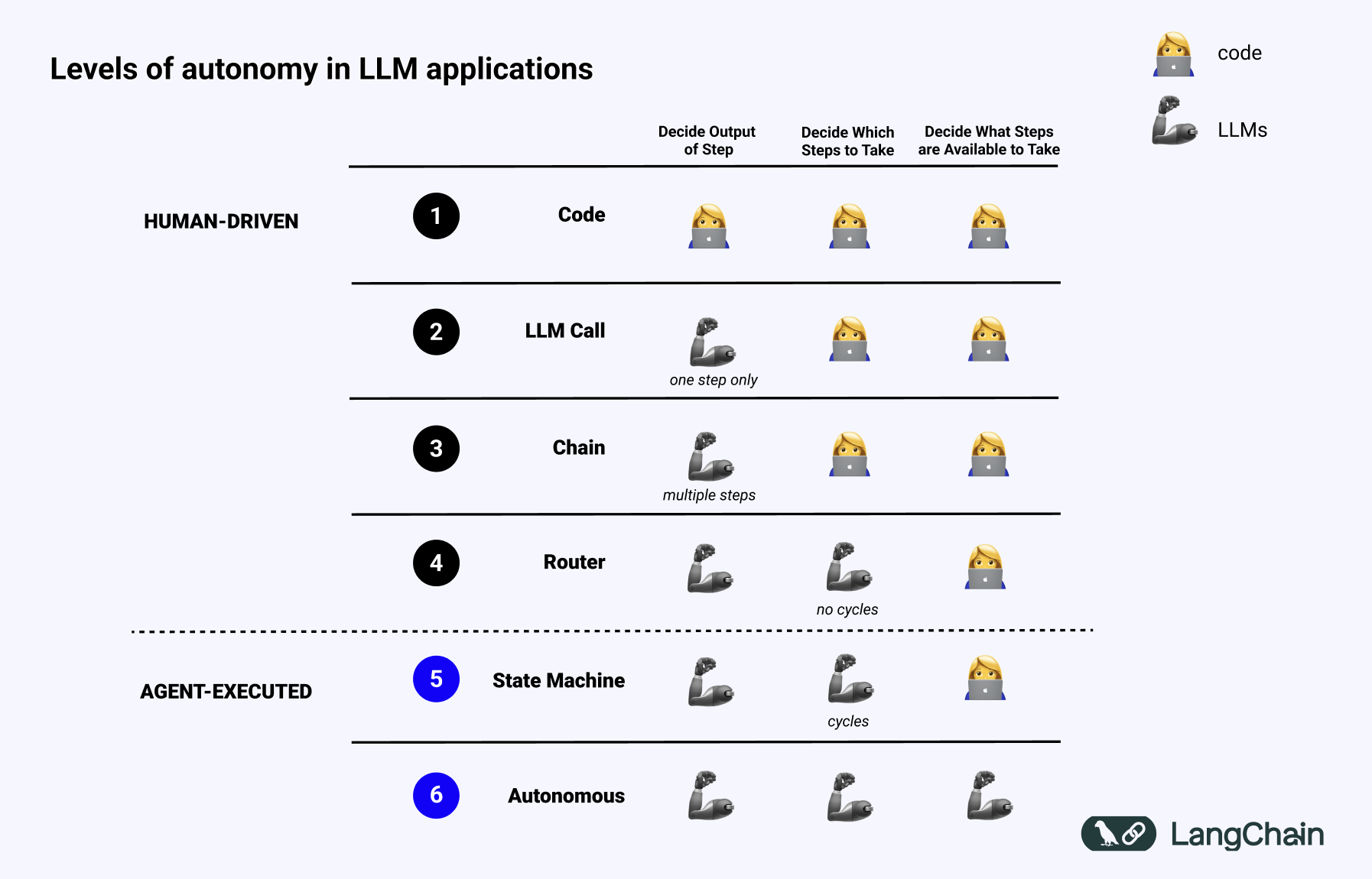

LangChain's release of the LangGraph v0.1 framework and the LangGraph Cloud service opens new possibilities for building sophisticated AI agent systems. We also explore optimizations to RAG (Retrieval-Augmented Generation) methods and several noteworthy open-source AI crawler projects. These tools and methods are crucial for improving the performance and practicality of AI applications.

🏢 Innovative AI Applications in Specific Domains

Financial Innovation: An in-depth analysis of how multi-agent systems are applied in finance and how they improve the accuracy and efficiency of decision-making.

Gaming Industry: Exploring how AI is turning games into personalized artistic experiences and what this means for game development.

AI Hardware: Examining future trends in AI hardware and how new devices can support more complex AI applications.

📊 AI Market Dynamics and Business Strategies

Large Model Market Competition: Analyzing the current price war among model vendors and the collaboration models emerging alongside it.

Platform Roles: Examining how DingTalk and Feishu are attracting large model vendors to build AI ecosystems.

AI Startups: Analyzing the opportunities and challenges facing AI startups, with special attention to the rise of AI search companies like Perplexity.

🔮 Future Outlook for AI

Edge Models: Industry experts predict that GPT-4-level edge models will arrive by 2026.

AI Application Proliferation: Discussing the potential timeframe and necessary conditions for widespread AI application adoption.

AGI Development: Exploring the development prospects of Artificial General Intelligence (AGI) and its potential impact.

This issue covers the latest advancements in AI technology, innovative applications, and market dynamics, aiming to give you comprehensive, in-depth insights into the AI field. Whether you're a developer, a product manager, or an AI enthusiast, we believe you'll find valuable information and inspiration here. Let's explore the possibilities of AI technology together!