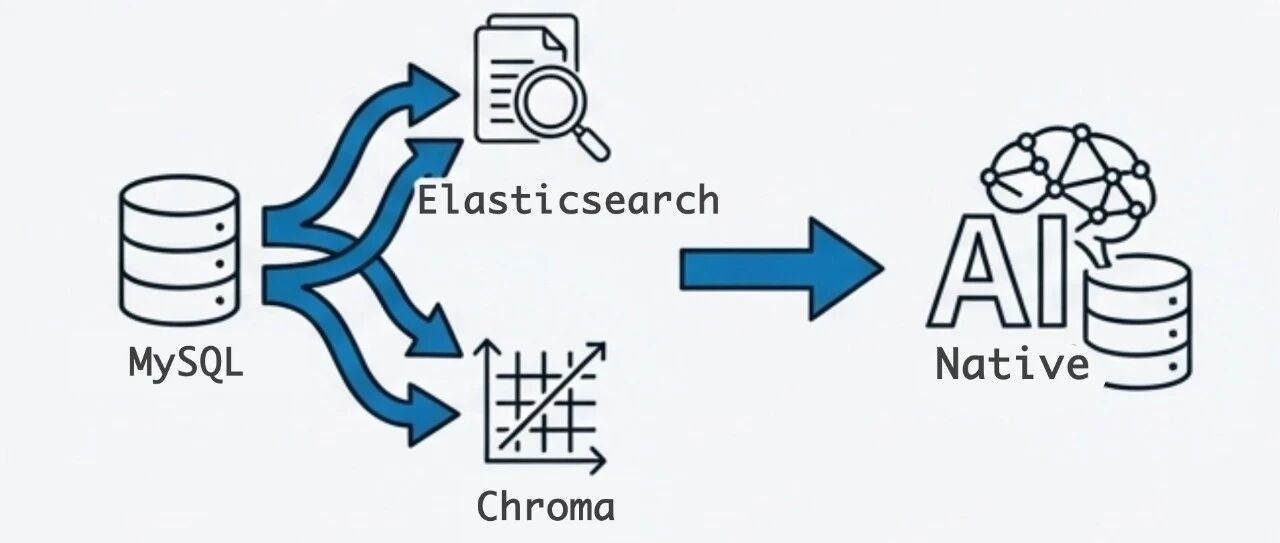

DeepSeek has officially released two landmark large language models: DeepSeek-V3.2 and DeepSeek-V3.2-Speciale.

DeepSeek-V3.2 aims to balance strong reasoning with efficient output length, which makes it well suited to everyday Q&A and general Agent tasks. On public reasoning benchmarks it reaches GPT-5-level performance, while substantially reducing computational cost and user wait time compared with similar models.

DeepSeek-V3.2-Speciale pushes the reasoning capabilities of open-source models to their limit. By incorporating the theorem-proving capabilities of DeepSeek-Math-V2, it performs comparably to Gemini-3.0-Pro on mathematics, coding, and general-domain benchmarks, and has won gold medals at multiple international Olympiads.

The article highlights one innovation of DeepSeek-V3.2 in particular: the first deep integration of thinking mode with tool calling. A large-scale method for synthesizing Agent training data greatly improves the model's generalization on complex tasks, allowing it to reach the highest level among open-source models on Agent evaluations.

Both models are open-source and are served through DeepSeek's official web interface, App, and API. V3.2-Speciale is additionally offered through a temporary API for community evaluation and research.