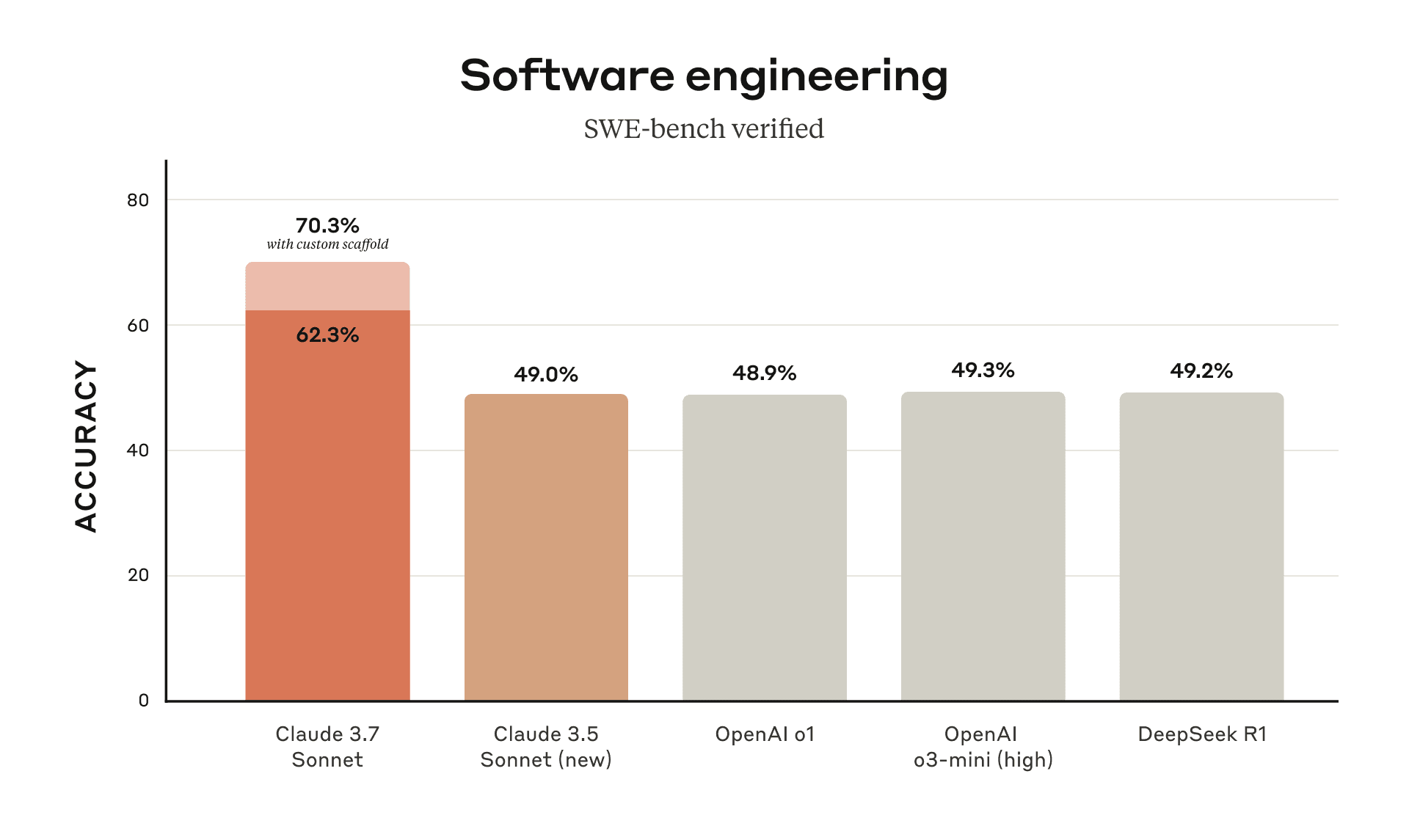

Anthropic has released Claude 3.7 Sonnet, a hybrid reasoning model that offers both near-instant responses and step-by-step reasoning, improving performance on tasks such as mathematics, physics, and programming. In standard mode, the model responds quickly; in extended thinking mode, it engages in deeper, self-reflective reasoning before answering.

Alongside the model, Anthropic has introduced Claude Code, an agentic command-line coding tool designed to work as an active collaborator. It can search code, edit files, write tests, and commit code. In early testing, Claude Code completed tasks in a single pass that would otherwise have taken more than 45 minutes of manual work.

Claude 3.7 Sonnet achieved leading results on both SWE-bench Verified and TAU-bench. GitHub integration is now also available on all Claude subscription plans, letting developers connect their code repositories directly to Claude.

Anthropic states that it conducted extensive testing and evaluation to ensure the model meets its standards for safety, reliability, and stability.