AK

@_akhaliq · 6h ago

ViSAudio

End-to-End Video-Driven Binaural Spatial Audio Generation

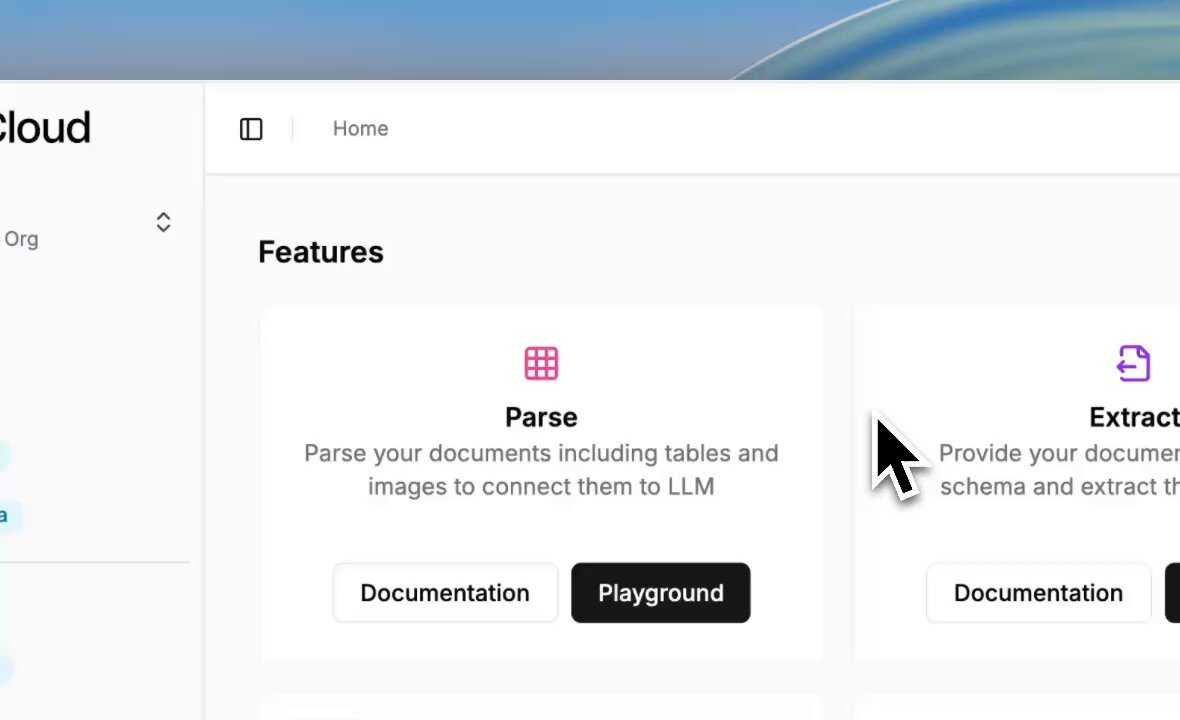

Deploy production-ready agent workflows with just one click from LlamaCloud. Here's us deploying the SEC filing extract and review agent!

Our new Click-to-Deploy feature lets you build and deploy complete document processing pipelines without touching the command line:

🚀 Choose from pre-built starter templates like SEC financial analysis and invoice-contract matching workflows

⚡ Configure secrets and deploy in under 3 minutes with automatic building and hosting

🔧 Full customization through GitHub - fork templates and modify workflows, UI, and configuration

📊 Built-in web interfaces for document upload, data extraction review, and result validation

Each template covers real-world use cases combining LlamaCloud's Parse, Extract, and Classify services into complete multi-step pipelines. Perfect for getting production workflows running quickly, then customizing as needed.

Try Click-to-Deploy in beta: developers.llamaindex.ai/python/llamaag…

Dynamically generated game visuals, pretty cool 👍

I've been working on Dramamancer -- AI game engine for interactive story games. 🥳

As author, you set key story beats for the AI narrator to improvise from. As player, you explore by typing what you want to do🎮

Want to try? storytelling-dev.midjourney.com

𝐈𝐬 𝐌𝐂𝐏 𝐀𝐥𝐫𝐞𝐚𝐝𝐲 𝐃𝐞𝐚𝐝? 𝐍𝐨𝐭 𝐒𝐨 𝐅𝐚𝐬𝐭. 🚀

The AI community is divided: some say Anthropic's new Skills feature makes MCP obsolete. Others argue that MCP is the future of AI tool integration.

The truth? They're complementary, not competing.

𝐒𝐤𝐢𝐥𝐥𝐬 = The brain. They encapsulate domain expertise and best practices, keeping AI agents focused even in long conversations.

𝐌𝐂𝐏 = The hands. It provides actual capabilities—reading files, calling APIs, and accessing databases like Milvus.

Think of it this way: Skills tell your AI agent what to do and how to think. MCP gives it the tools to actually do it.

𝐌𝐢𝐥𝐯𝐮𝐬 𝐬𝐡𝐨𝐰𝐬 𝐡𝐨𝐰 𝐭𝐡𝐢𝐬 𝐰𝐨𝐫𝐤𝐬 𝐢𝐧 𝐩𝐫𝐚𝐜𝐭𝐢𝐜𝐞:

🔷 Skills: Provide specialized knowledge on vector search strategies and Milvus best practices

🔷 MCP: Handle actual database connections and operations

🔷 Together: Enable querying collections, […] and managing data retrieval seamlessly (milvus.io/blog/is-mcp-al…)

Know more: https://t.co/fOEcqsT4oI

———

👉 Follow @milvusio for everything related to unstructured data!

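There's an interesting detail here: Gemini can know your location, so the lighthouse in this image is the landmark lighthouse of Evanston, the city where I live. The purple hat and the N stand for Northwestern University, which is not far from my home.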

Speed up product experimentation and feature rollouts with @Amplitude_HQ Experiment Implementation. 📈 This custom agent will:

• generate experiment scaffolding

• insert clean, consistent event tracking

• map variations to your product logic

• ensure your data flows correctly into Amplitude

However, the prompt I originally wrote for GPT-4o image didn’t produce great results when generating on Nano Banana Pro, so I’ve been repeatedly tweaking the keywords to get better outcomes. Here are some of the failed versions I went through.

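Misreading the source text usually means the model isn't good enough or the source is poorly written.

Omissions mostly happen because the input is too long.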

x.com/imxiaohu/statu…

If you translate programmatically, you can avoid this as follows:

1. Convert the input into HTML and add an id to each block, for example

(<h1 id="title1"> title </h1>

<p id="p1"> paragraph1 </p>

<ol id="list1">

<li id="list1-item1">item1</li>

</ol>

)

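2. Have it keep the HTML structure unchanged while translating

3. After translating, check all the ids for omissions and flag any that are missing

But this isn't really necessary; a quick manual check is best.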
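The id-based check described in the steps above could look roughly like the Python sketch below. The helper names `wrap_blocks` and `missing_ids` are made up for illustration, the translated string stands in for whatever a model would return, and pulling ids out with a regular expression is a simplification that assumes double-quoted `id` attributes.

```python
import re
from html import escape

# Simplification: matches only double-quoted id attributes.
ID_PATTERN = re.compile(r'id="([^"]+)"')


def wrap_blocks(paragraphs):
    """Wrap each paragraph in a <p> tag with a unique id (p1, p2, ...)."""
    return "\n".join(
        f'<p id="p{i}">{escape(text)}</p>'
        for i, text in enumerate(paragraphs, start=1)
    )


def missing_ids(source_html, translated_html):
    """Return ids found in the source but not in the translation, in source order."""
    translated = set(ID_PATTERN.findall(translated_html))
    return [i for i in ID_PATTERN.findall(source_html) if i not in translated]


source = wrap_blocks(["First paragraph.", "Second paragraph.", "Third paragraph."])

# Hypothetical translator output that silently dropped the second block.
translated = '<p id="p1">Premier paragraphe.</p>\n<p id="p3">Troisième paragraphe.</p>'

print(missing_ids(source, translated))  # ['p2']
```

Any id that comes back from `missing_ids` marks a block to re-translate or review by hand.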

Congratulations to @deepseek_ai - read more about the models below, including a detailed research paper. Weights continue to be MIT licensed!

🚀 Launching DeepSeek-V3.2 & DeepSeek-V3.2-Speciale — Reasoning-first models built for agents!

🔹 DeepSeek-V3.2: Official successor to V3.2-Exp. Now live on App, Web & API.

🔹 DeepSeek-V3.2-Speciale: Pushing the boundaries of reasoning capabilities. API-only for now.

📄 Tech report: huggingface.co/deepseek-ai/De…

1/n