Dear friends,

👋 Welcome to this issue's curated article selection from BestBlogs.dev!

🚀 In this edition, we dive into the latest breakthroughs, innovative applications, and industry dynamics in artificial intelligence. From model advancements to development tools, from cross-industry applications to market strategies, we've handpicked the most valuable content to keep you at the forefront of AI developments.

🔥 AI Models: Breakthrough Advancements

1.Google Gemini 1.5 Pro: 2-million token context window with code execution capability

2.Alibaba's FunAudioLLM: Open-source speech model enhancing natural voice interactions

3.Meta's Chameleon: Multimodal AI model outperforming GPT-4 in certain tasks

4.Moshi: Native multimodal AI model by France's Kyutai lab, approaching GPT-4o level

5.ByteDance's Seed-TTS: High-quality speech generation model mimicking human speech

💡 AI Development: Tools, Frameworks, and RAG Technology Innovations

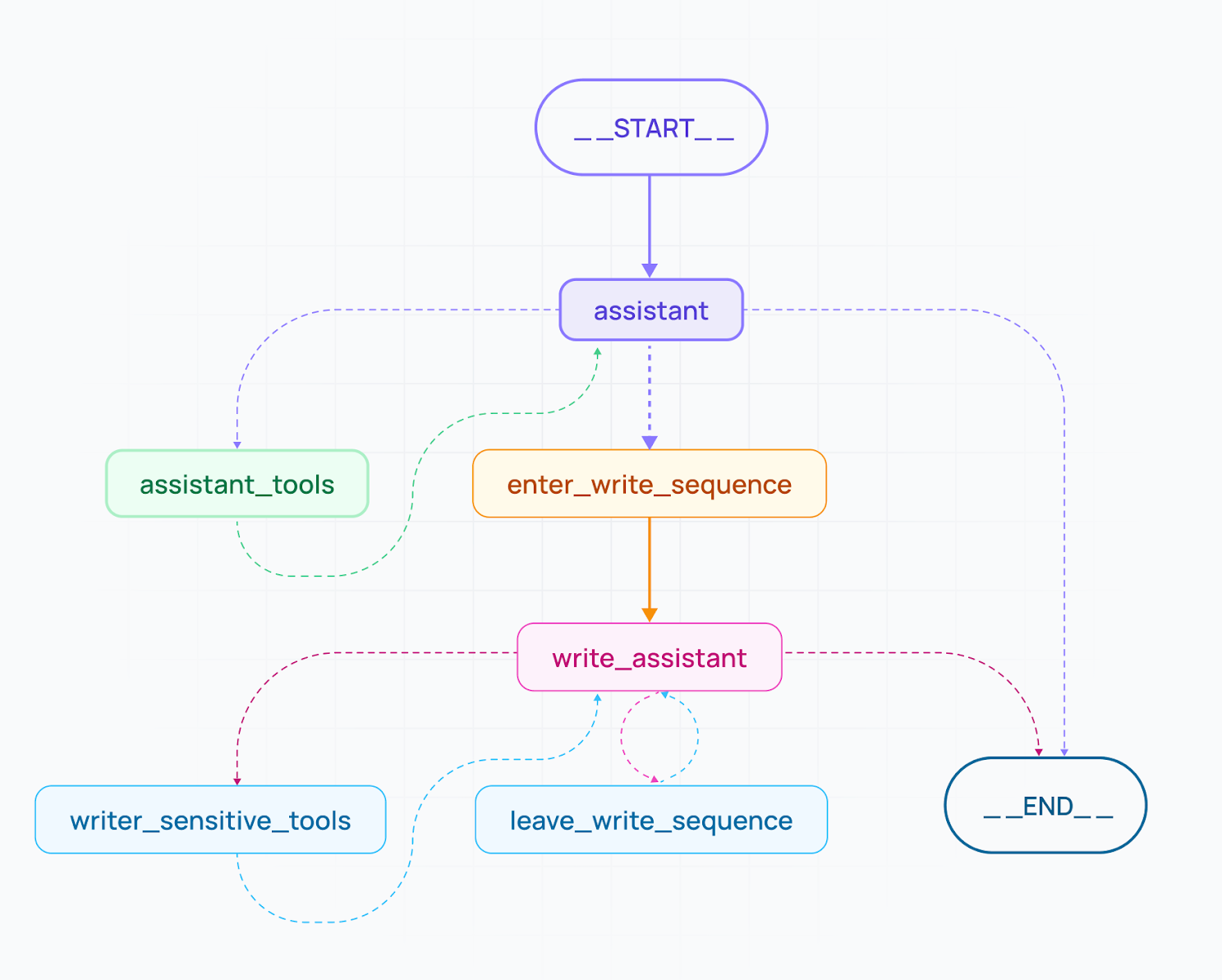

1.LangGraph v0.1 and Cloud: Paving the way for complex AI agent system development

2.GraphRAG: Microsoft's open-source tool for complex data discovery and knowledge graph construction

3.Kimi's context caching technology: Significantly reducing costs and response times for long-text large models

4.Jina Reranker v2: Neural reranker for agent RAG, supporting multilingual and code search

5.Ant Group's Graph RAG framework: Enhancing Q&A quality with knowledge graph technology

🏢 AI Products: Cross-industry Applications

1.Tencent Yuanbao AI Search upgrade: Introducing deep search mode for structured, rich responses

2.ThinkAny: AI search engine leveraging RAG technology, developed by an independent developer

3.Replika: AI emotional companion using memory functions for deep emotional connections

4.AI in sign language translation: Signapse, PopSign improving life for the hearing impaired

5.Agent Cloud and Google Sheets for building RAG chatbots: Detailed implementation guide

📊 AI News: Market Dynamics and Future Outlook

1.Bill Gates interview: AI set to dominate fields like synthetic biology and robotics

2.Tencent's Tang Daosheng on AI strategy: AI beyond large models, focusing on comprehensive layout and industrial applications

3.Gartner research: Four key capabilities for AIGC to realize value in enterprises

4.AI product pricing strategies: Examining nine business models including SaaS and transaction matching

5.Why this wave of AI entrepreneurship is still worth pursuing: Exploring how AI technology changes supply and creates new market opportunities

This issue covers cutting-edge AI technologies, innovative applications, and market insights, providing a comprehensive and in-depth industry perspective for developers, product managers, and AI enthusiasts. We've paid special attention to RAG technology's practical experiences and latest developments, offering valuable technical references. Whether you're a technical expert or a business decision-maker, you'll find key information here to grasp the direction of AI development. Let's explore the limitless possibilities of AI together and co-create an intelligent future!