Dear friends,

👋 Welcome to this edition of curated articles from BestBlogs.dev!

🚀 In this issue, we dive into the latest breakthroughs, innovative applications, and industry dynamics in the AI field. We'll explore cutting-edge developments in model advancements, development tools, product innovations, and market strategies. Join us on this exciting journey through the frontiers of AI!

🧠 AI Models & Technology: Architectural Innovation, Performance Leaps

- Jamba 1.5, built on a hybrid Transformer-Mamba architecture, significantly enhances long-context processing and points to a new era for models that go beyond the pure Transformer.

- Meta's Transfusion model, fusing Transformer and Diffusion technologies, achieves breakthrough progress in multimodal AI.

- Open-source models like Gemma 2 and GLM-4-Flash promote AI accessibility and lower entry barriers, and come with comprehensive training and fine-tuning guides.

⚙️ AI Development & Tools: Efficiency Boost, Application Expansion

- An in-depth analysis of RAG system optimization techniques shows how to improve unstructured data processing, and features the innovative "late chunking" technique.

- Google Cloud showcases Imagen 3 on Vertex AI, advancing high-quality visual content generation and multimodal search system construction.

- Detailed explanations of AI agent design patterns (like ReAct) and unified tool-use APIs facilitate the development of smarter, more efficient AI systems.

💡 AI Products & Applications: Creative Spark, Business Innovation

- Ideogram 2.0 and CapCut make significant strides in image generation and video editing, showcasing the vast potential of AI creative tools in global markets.

- AI-assisted programming tools (like Cursor AI) attract major investments, highlighting the market's strong demand for enhanced development efficiency.

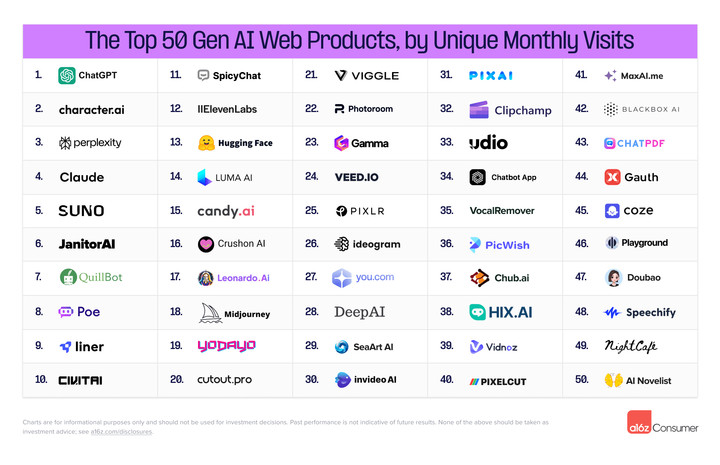

- An analysis of pricing models across 40 leading AI products reveals emerging trends in AI commercialization and explores innovative business models that blend B2C and B2B approaches.

🌐 AI Industry Dynamics: Shared Insights, Future Outlook

- Experts including Li Mu, Demis Hassabis, and Zhang Hongjiang discuss AI technology trends and challenges, focusing on large model scaling, efficiency, and multimodal AI development.

- Mark Zuckerberg and a16z analyze AI's profound impact on industries, predicting software industry restructuring and the acceleration of "software becoming labor."

- Thought leaders like Andrew Ng explore AI ethics, employment impacts, and AGI development prospects. They also compare AI development paths in China and the US, offering insights into global AI innovation and investment landscapes.

Intrigued by these exciting AI developments? Click through to read the full articles and explore more fascinating content!