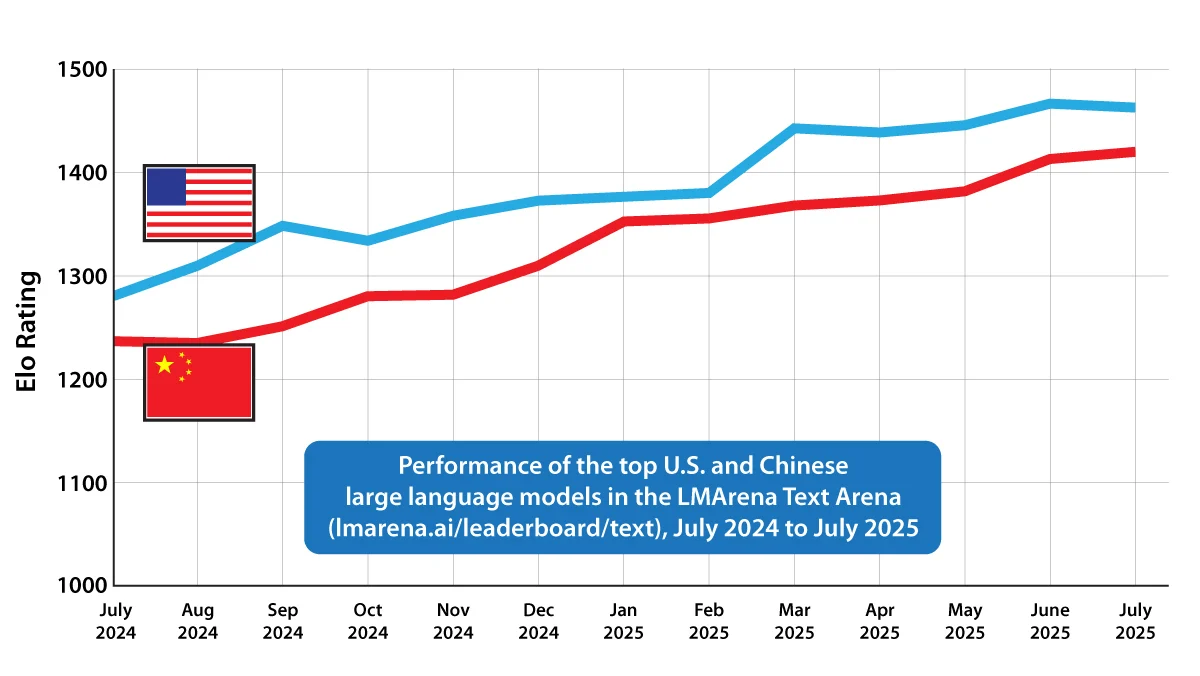

The article reports that Qwen open-sourced three major models in quick succession: Qwen3-235B-A22B-Thinking-2507 (reasoning model), Qwen3-235B-A22B-Instruct-2507 (basic model), and Qwen3-Coder (coding model). According to the article, each has achieved state-of-the-art (SOTA) results among open-source models worldwide in its respective field. The new Qwen3 reasoning model (the Thinking edition) significantly improves performance on logical reasoning, mathematics, science, and coding tasks, supports a native 256K context, and surpasses OpenAI o4-mini on the challenging Humanity's Last Exam benchmark. It also solves complex problems while showing its detailed 'thinking process,' which the article highlights as an innovative advantage. Qwen3-Coder is reported to have surpassed closed-source models such as Gemini-2.5 Pro on programming benchmarks like LiveCodeBench and CFEval. The article emphasizes China's rapid advancement and leadership in open-source LLMs, pointing out that Alibaba's Tongyi family of large language models has become the leading open-source model family.