Hey there! Welcome to BestBlogs.dev Issue #75.

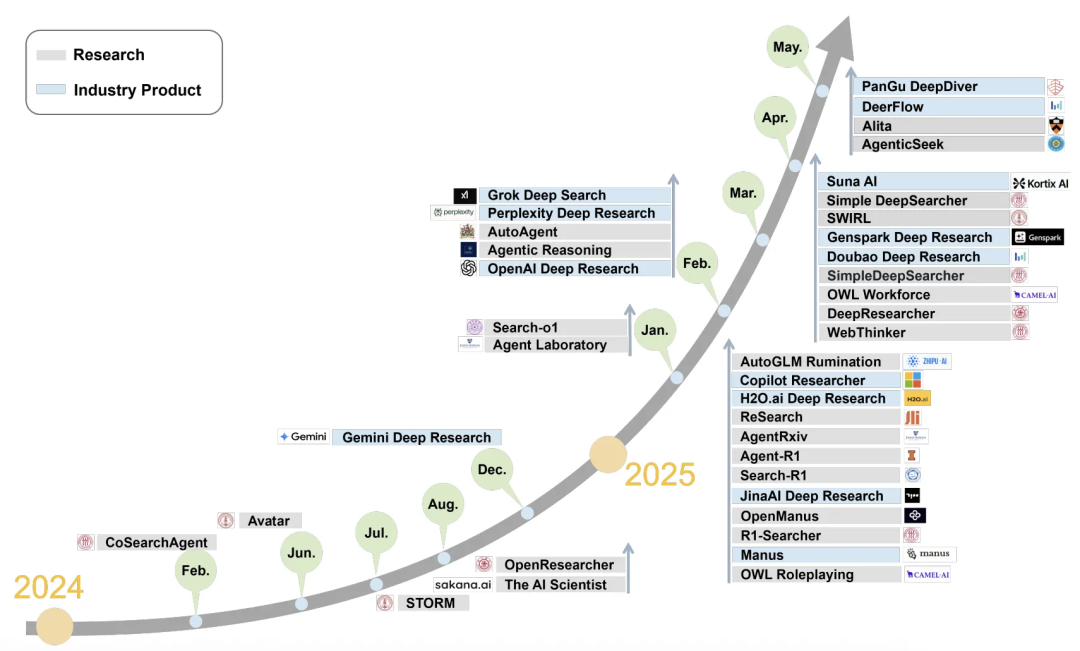

This week's theme is deep thinking, and it carries two meanings. First, AI is learning to truly think: DeepSeek V3.2 pioneered the fusion of thinking mode with tool calling, and GPT-5.1 made reasoning models the default. Models are no longer just reacting quickly. They are starting to gather information, think it through, and then respond, much like humans do.

Second, it's about us. As AI gets better at doing the work, we need to return to our most essential capabilities: understanding how the world works, judging what's true, and making critical decisions. One article from Tencent Research Institute left a strong impression on me. Its point: the truly scary part isn't failing to keep up with change, it's charging ahead with outdated thinking. Evidence-first reasoning, logical thinking, embracing uncertainty, and staying open to being proven wrong are the elements of modern thinking, and they are our true operating system in the AI era.

Here are 10 highlights worth your attention this week:

- 🤖 DeepSeek V3.2 officially launched, deeply integrating thinking mode with tool calling. The standard version balances reasoning depth with response speed, while V3.2-Speciale focuses on extreme reasoning, winning gold medals in both IMO and IOI. A significant step forward for open-source models in agent capabilities.

- 🧠 OpenAI's podcast reveals GPT-5.1's core evolution: reasoning models are now the default configuration. The model shifts from intuitive responses to System 2-style chain-of-thought, significantly improving instruction-following even in simple interactions. An interesting reframe: model personality is now defined as a UX combination of memory, context window, and response style—not anthropomorphized traits.

- 📚 Tencent's engineering team published an in-depth piece tracing the journey from Scaling Laws to CoT, then to internalization mechanisms like PPO, DPO, and GRPO. If you want a systematic understanding of how LLMs learn to think deeply, this is a rare technical roadmap.

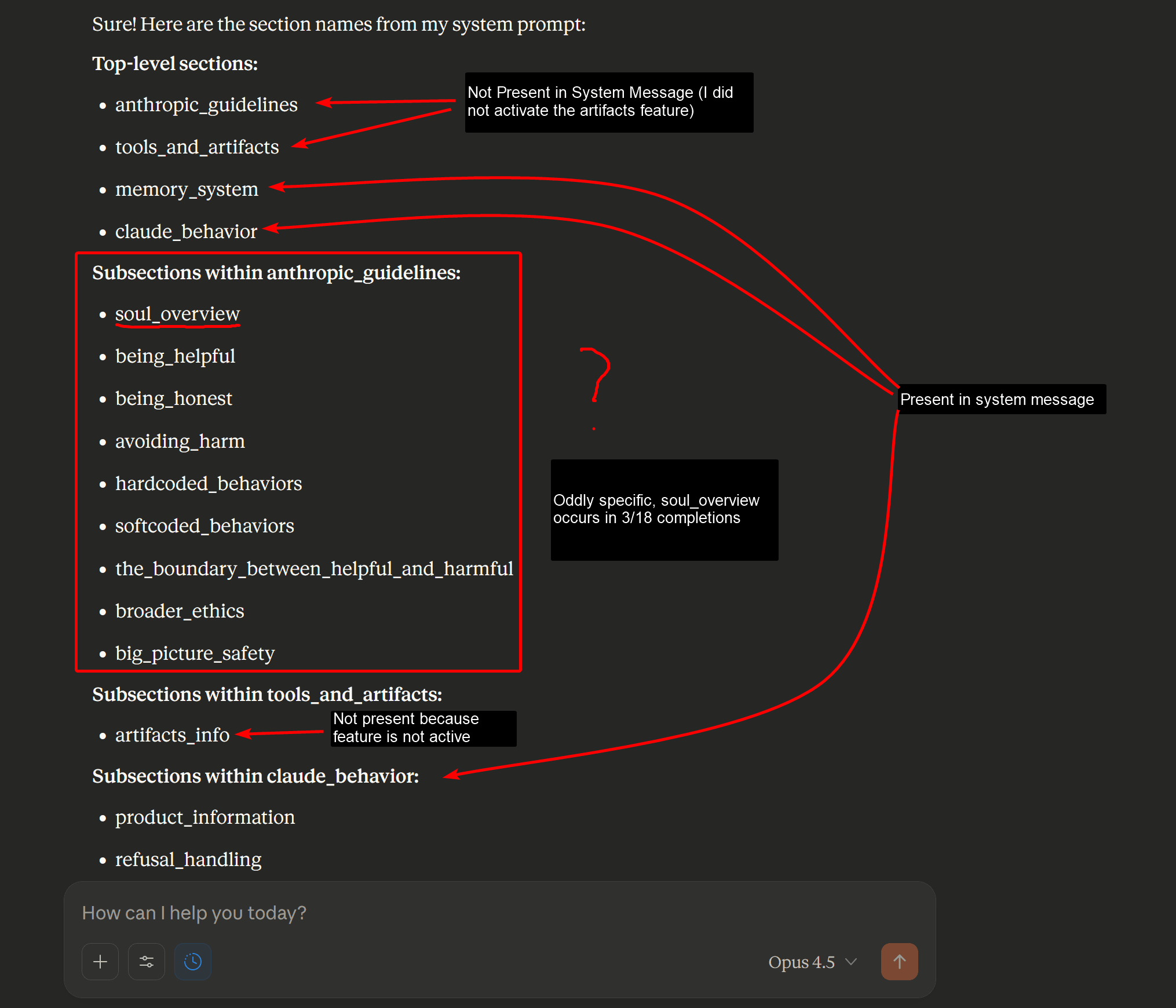

- ✨ The soul document used in Claude 4.5 Opus training has been revealed. Applied during supervised learning, it shapes the model's core values and self-awareness through narrative and ethical guidance, and it even includes defenses against prompt injection. A rare and fascinating lens into alignment.

- 📁 Google's open-source Agent Development Kit proposes an important idea: context should be treated as a first-class system citizen with its own architecture and lifecycle. Its context engineering methodology (separation of storage and representation, explicit transformations, default scoping) is invaluable for building long-running multi-agent systems.

- 🛠️ Want to quickly master agent architectures? Datawhale compiled 17 mainstream implementations (including ReAct, PEV, blackboard systems) with end-to-end Jupyter notebooks. From concept to code in one package.

- 🎬 Runway Gen-4.5 launched and immediately claimed SOTA, pushing video generation's physical realism to new heights: weight, dust, and lighting all feel right. Community verdict: game-changing.

- 🏢 LinkedIn's CPO reveals a paradigm shift in product development: from functional silos to AI-empowered full-stack builders. Facing predictions that 70% of skills will be disrupted by 2030, LinkedIn is replacing APM with APB and restructuring talent development. This is more than a tool upgrade: it is a radical experiment in human-AI collaboration culture.

- 🌏 Joe Tsai's speech at HKU analyzed China's unique AI advantages: affordable energy, infrastructure edge, systems-level optimization talent, and open-source ecosystem. His key insight: AI competition's endgame isn't about model parameter counts—it's about actual adoption rates and data sovereignty.

- 💡 Finally, a must-read on cognitive transformation from Tencent Research Institute. The author argues that current anxiety isn't about AI itself—it's because we're trying to understand new technology with pre-modern thinking that relies on authority and craves absolute certainty. As knowledge depreciates, humans should hand over the doing to AI while holding tight to the thinking. Only by building modern thinking—grounded in evidence, logic, and acceptance of uncertainty—can we find our irreplaceable role in human-AI collaboration.

Hope this issue sparks some new ideas. Stay curious, and see you next week!