Hey there! Welcome to BestBlogs.dev Issue #77.

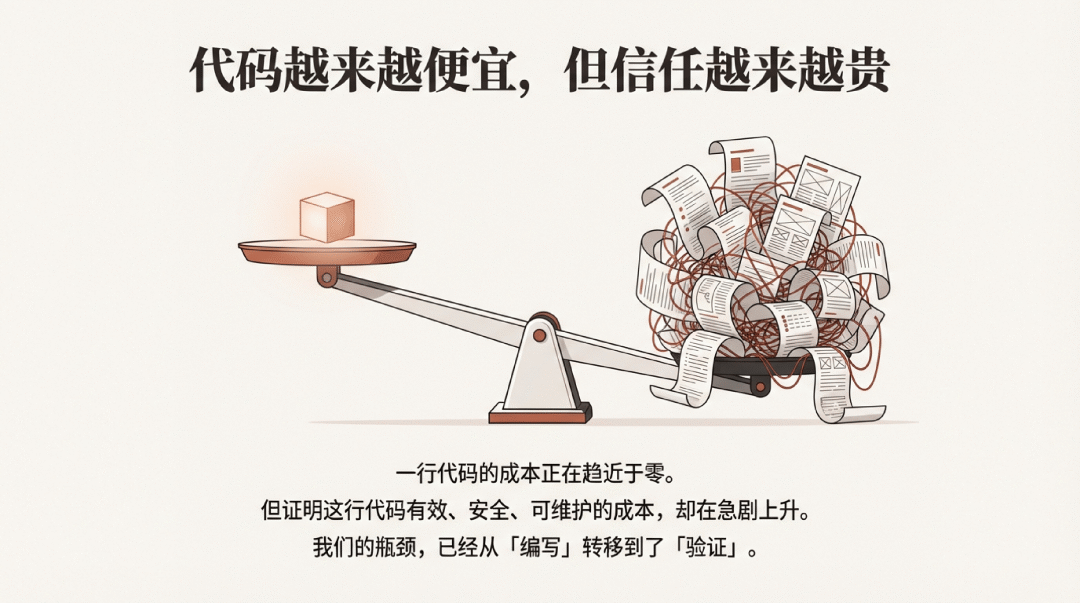

This week's theme is Vibe Engineering. Simon Willison captured it perfectly with his JustHTML project: "The agent types, I think." It's not about throwing code at AI and praying the tests pass; it's about engineers taking responsibility for every line while leveraging AI agents at every step. From OpenAI's internal 92% Codex adoption rate to Every's 99% AI-written codebase, vibe engineering is evolving from buzzword to methodology.

Speaking of practice, I spent this week applying vibe engineering to a major overhaul of BestBlogs.dev's backend — module separation, distributed deployment, database clustering, upgrading from single-server to a scalable distributed architecture. The experience reinforced a key insight: AI dramatically boosts coding efficiency, but the "thinking" parts—architecture decisions, module boundaries, testing strategies—still require human judgment. Deployment coming soon.

Here are 10 highlights worth your attention this week:

🏆 Gemini 3 Flash attempts to break the Pareto frontier of AI models: Pro-level reasoning (90.4% GPQA) with Flash-level latency, hitting 218 tokens/sec throughput. Adjustable thinking levels and context caching make complex agent scenarios far more cost-effective. How will OpenAI respond?

🤖 GPT-5.2 Codex is optimized specifically for agentic coding—better long-context comprehension, reliable large-scale refactoring, and enhanced security capabilities. Early testers say it's expensive but genuinely good. The coding model arms race enters its second half.

🔬 DeepMind CEO Demis Hassabis proposed a core thesis: AGI = 50% scaling + 50% innovation. Data alone won't cut it; we need AlphaGo-style search and planning capabilities. He compared the AI transformation to "the Industrial Revolution at 10x speed" and shared profound insights on post-scarcity economics.

🛠️ Vibe Engineering dominated this week's discourse. OpenAI's internal data shows Codex users produce 70% more PRs; Simon Willison demonstrated 3,000 lines passing 9,200 tests with JustHTML; Kitze distinguished between blindly trusting AI (Vibe Coding) and strategically guiding it (Vibe Engineering); and Taobao's team shared practical SDD implementation experience.

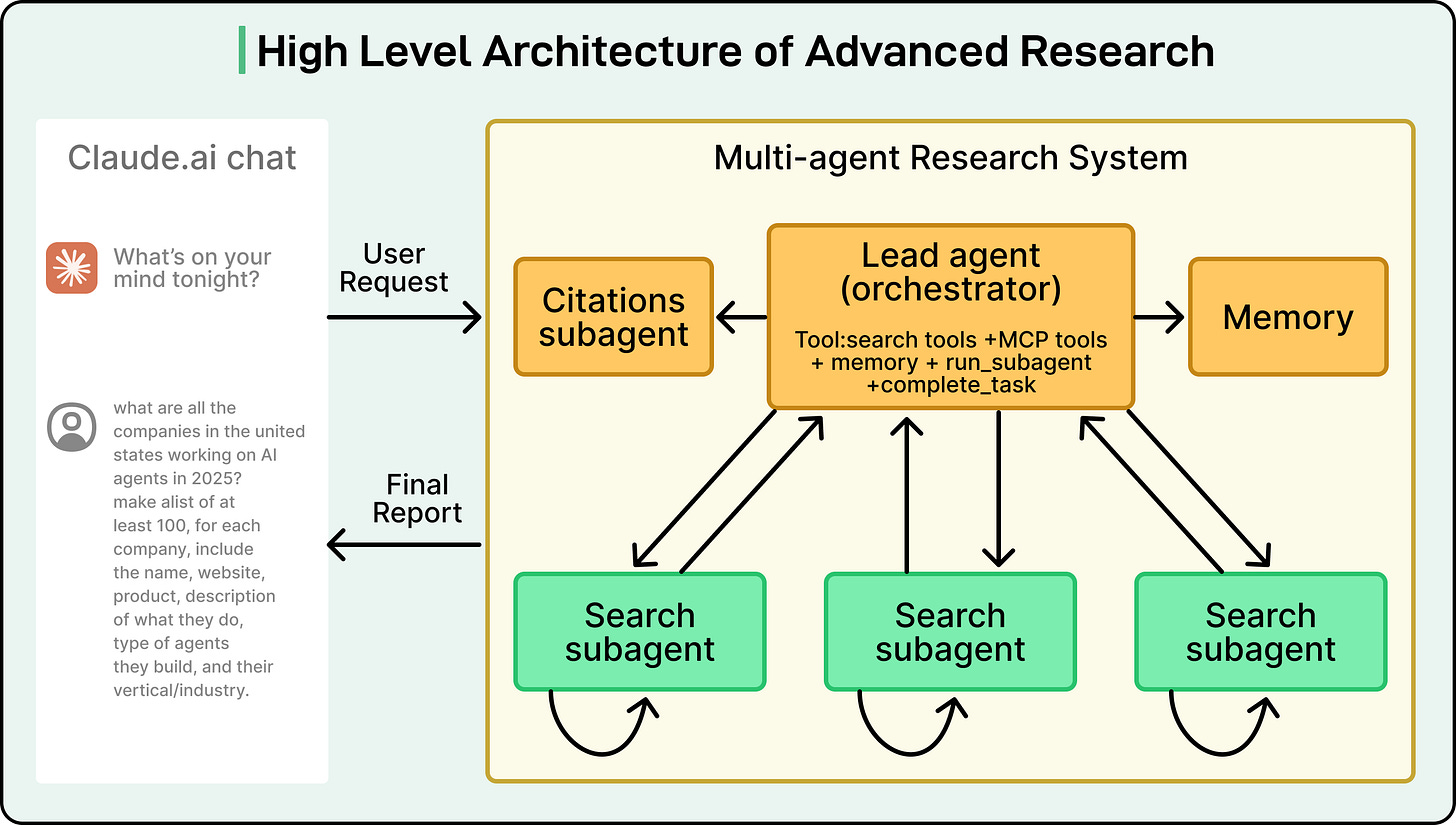

🧩 ByteByteGo systematically deconstructed the Deep Research multi-agent architecture—from orchestrators decomposing tasks to parallel sub-agent retrieval to synthesizing cited reports. The piece also compares implementations across OpenAI, Gemini, Claude, and Perplexity. Essential reading for understanding AI research systems.

📈 Lovable hit $200M ARR in under a year with just 100 people, shattering SaaS growth records. Elena Verna's retrospective reveals the new AI-era growth playbook: 95% innovation investment, aggressive freemium strategy, building in public, and replacing MVP with "Minimum Lovable Product (MLP)."

💡 Every CEO Dan Shipper shared their radical approach to building an AI-native company: 99% of code written by AI agents, with individuals building and maintaining complex production apps solo. The "compound engineering" concept, which converts tacit development knowledge into reusable prompt libraries, achieves 10x engineering efficiency gains.

📊 Zhenfund's Dai Yusen defined 2026 as The Year of R: Return (ROI accountability), Research (new paradigms beyond pure scaling), and Remember (personalized memory as the real moat). He warned of potential secondary market corrections and offered a "barbell strategy" for navigating the cycle.

🎨 Image and video generation saw major updates. GPT Image 1.5 significantly improves instruction following and precise local editing; ByteDance's Seedance 1.5 pro achieves audio-video joint generation with native multilingual lip-sync support—marking AI video's leap from visual-only to audiovisual storytelling.

🌐 Anthropic's Interviewer tool conducted deep interviews with 1,250 users, mapping a "human emotional radar." Knowledge workers hide their automation to maintain a professional image, creators struggle between efficiency and originality anxiety, and scientists withhold core judgment due to reliability concerns. AI research is shifting from technical metrics to a deeper understanding of human psychology.

Hope this issue sparks some new ideas. Stay curious, and see you next week!

![124. Year-end Review [Beyond 2025]: Discussing 2026 Expectations, The Year of R, Market Correction, and Our Bets with Dai Yusen](https://image.jido.dev/20251214012219_fjs5f3qs2xi9vb47c4k5facqos0k.png)