Hello everyone! Welcome to Issue 78 of BestBlogs.dev's curated AI article recommendations.

This is our final issue of 2025, and this week's theme is "The Infinite Brain."

An article by Notion founder Ivan Zhao provides a fitting conclusion to the year. He frames AI as the third revolutionary force following steam and steel: steam engines expanded the limits of physical labor, steel raised the heights of architecture, and AI is becoming the "infinite brain"—breaking through cognitive boundaries. His core argument: we need to stop viewing AI merely as a "copilot" and start reimagining how we work entirely.

The data backs this up. A survey by Lenny and Figma, based on 1,750 respondents, reveals that over half of professionals save at least half a day per week thanks to AI. Engineers are migrating from GitHub Copilot to Cursor and Claude Code. PMs are using AI to build prototypes across functional boundaries. Interestingly, entrepreneurs benefit the most while designers perceive the least value: AI penetration varies significantly by role.

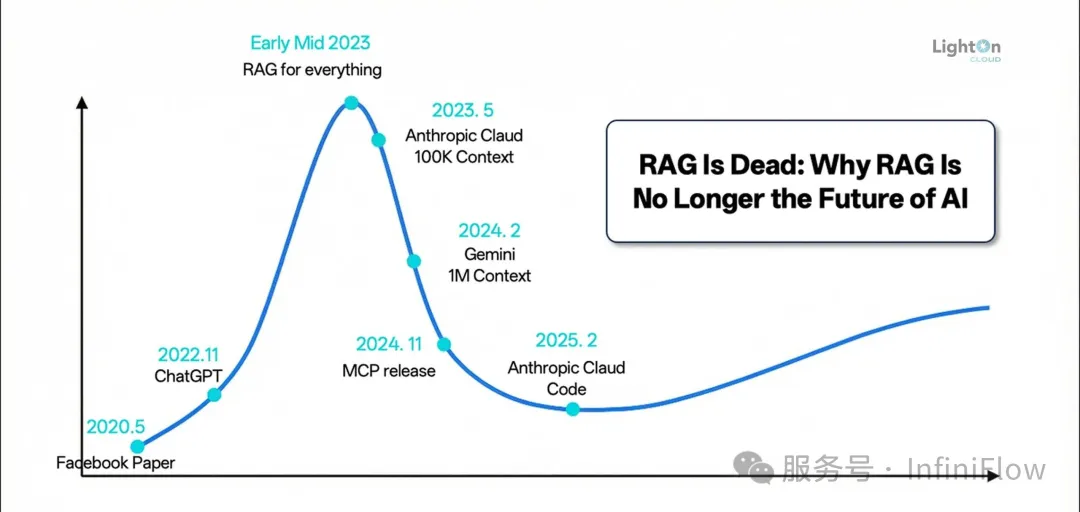

Yet this year also exposed the gap between ambition and reality. Research from Berkeley and DeepMind shows that 68% of Agents are limited to 10 steps or fewer, with multi-agent collaboration suffering from "coordination tax" and error amplification. A candid post-mortem from a frontend team put it bluntly: technical success doesn't equal product success. Because of the 80/20 bottleneck, where Agents handle 80% of a task but the final 20% requires manual fixes, users preferred doing things themselves. Their conclusion: "Skills over standalone Agents." Integrate capabilities into general-purpose tools rather than reinventing the wheel.

Perhaps this captures 2025's true story: AI is indeed reshaping how we work, but the process is messier, more pragmatic, and demands more patience than we imagined. As we shift from "what AI can do" hype to "how AI should be used" in practice, the real transformation is just beginning.

Here are this week's 10 highlights worth your attention:

🧠 Notion founder Ivan Zhao interprets AI transformation through a historical lens, framing it as "The Infinite Brain." At the individual level, programmers leap from 10x to 30-40x productivity; at the organizational level, AI breaks through traditional communication bottlenecks; at the economic level, knowledge economies will grow from "Florence"-scale cities into "Tokyo"-scale megacities. The takeaway: stop treating AI as a copilot and reimagine work itself.

📊 The AI Workplace Companion Survey by Lenny and Figma, based on 1,750 respondents, reveals AI's real ROI: over half of professionals save at least half a day weekly. Entrepreneurs benefit most; designers perceive the least value. Engineers are shifting from Copilot to Cursor and Claude Code. The opportunity frontier is migrating from content production toward strategic thinking.

🤖 Three papers lay bare the Year One struggles of Agents: 68% are limited to 10 steps or fewer, multi-agent setups face coordination tax and error amplification, and throwing more compute at the problem doesn't improve performance linearly. Real breakthroughs require systematic evolution in tool management, verification capabilities, and communication protocols. Essential reading for Agent teams.

💡 A candid post-mortem from a frontend team: technical success, product failure. User habit resistance, the 80/20 bottleneck, and workflow fragmentation led to zero adoption post-launch. Key lesson: technical success ≠ product success. Skills integrated into general tools beat standalone Agents. Hard-won lessons are the most valuable.

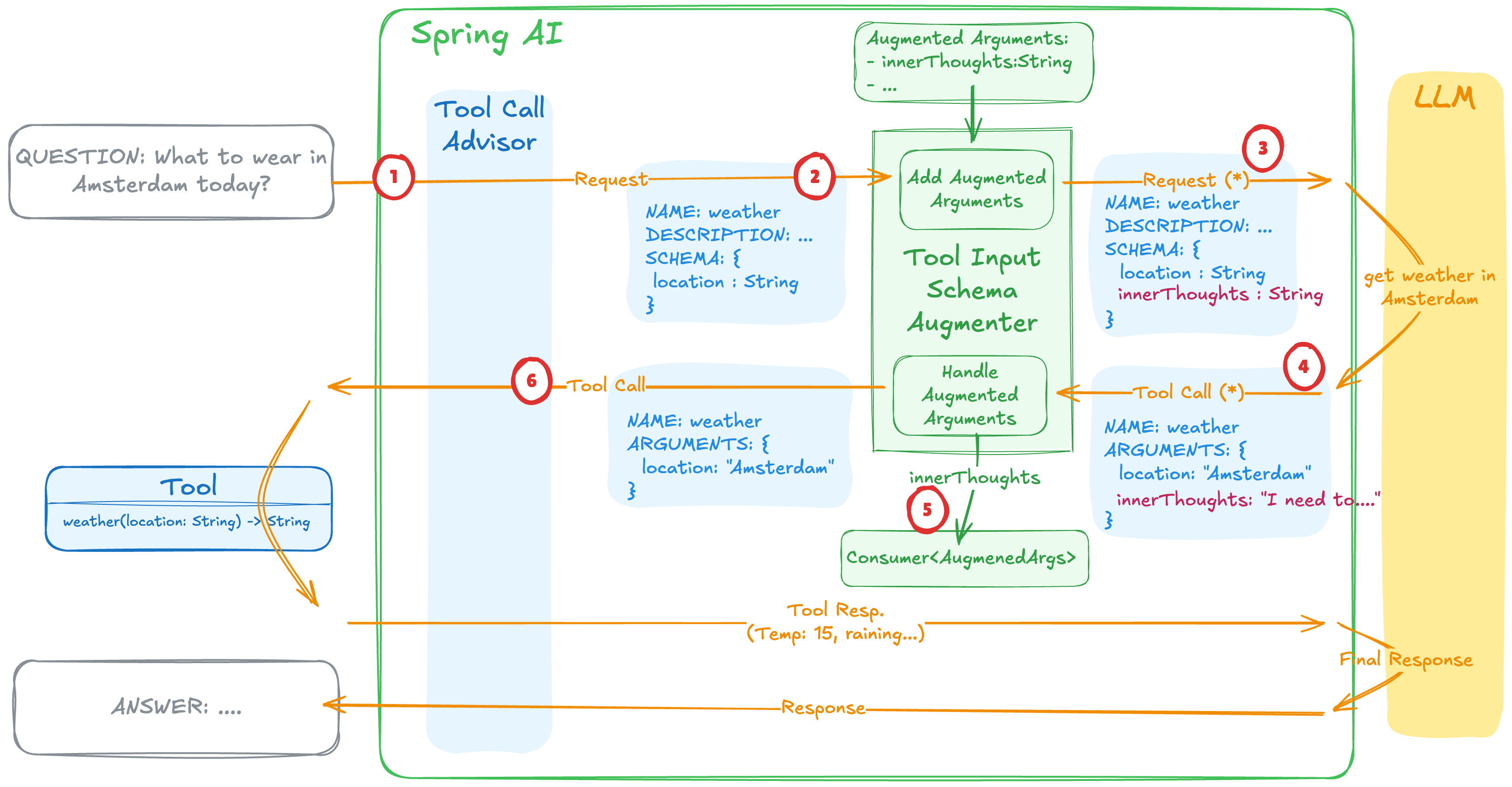

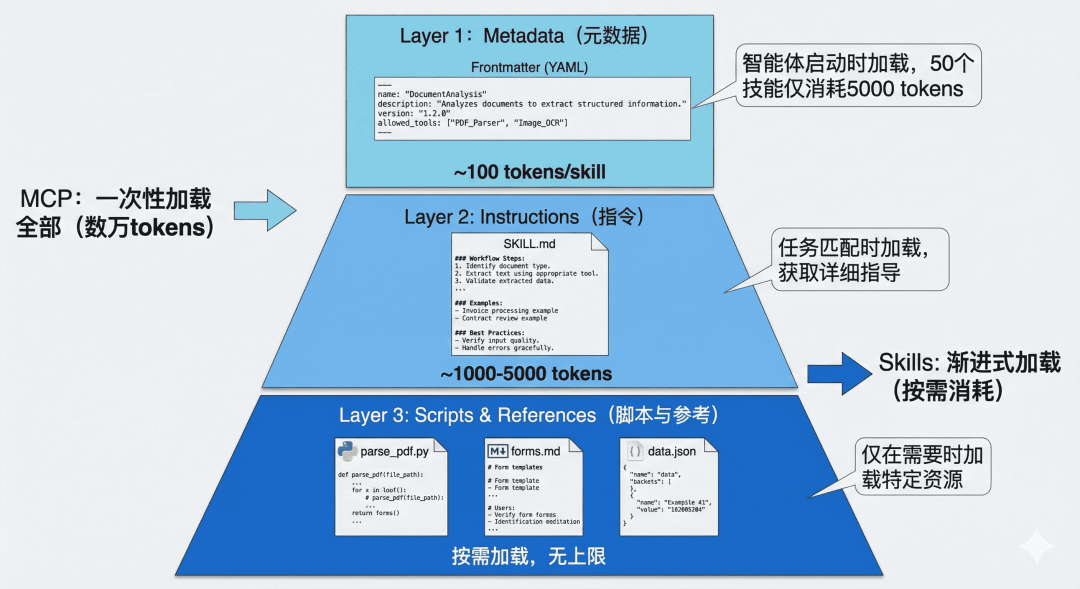

🔧 A detailed comparison of MCP vs. Agent Skills: MCP solves connectivity; Skills encapsulate domain knowledge and operational workflows. Skills' "progressive disclosure" mechanism uses a three-layer architecture to load information on demand, effectively mitigating context explosion. The proposed MCP + Skills hybrid architecture is an important reference for Agent development.

📈 LangChain's annual report shows 57% of enterprises have deployed Agents in production. Customer service and R&D analysis are the two dominant use cases; the biggest challenge is output quality, not cost. Observability tracking is now standard; multi-model hybrid architectures are trending. Data-backed industry baselines.

🎯 Three Gemini co-leads at Google DeepMind in a rare joint interview: Flash now matches previous-gen Pro performance; Pro's main role has become distilling Flash. Post-training is the biggest breakthrough opportunity; latency and speed are severely undervalued. Code, reasoning, and math are largely "solved"—next up: open-ended tasks and continuous learning.

🚀 Major updates from Chinese open-source models this week. Zhipu's GLM-4.7 achieves open-source SOTA in coding—73.8% on SWE-bench, outperforming GPT-5.2 on Code Arena blind tests. MiniMax's M2.1 targets multi-language programming, surpassing Claude Sonnet 4.5 in tests while open-sourcing the new VIBE full-stack benchmark.

🎤 Tongyi open-sources Fun-Audio-Chat 8B, an end-to-end voice model that bypasses the traditional ASR+LLM+TTS pipeline for lower latency. Highlights include emotion perception and Speech Function Call support, which lets users execute complex tasks through natural voice. Weights and code fully available.

🌐 Y Combinator partners review 2025's five AI surprises: YC startups' model preference has shifted from OpenAI to Anthropic; startups are achieving arbitrage through model orchestration layers; the single-person unicorn remains unrealized. A separate year-end dialogue offers a bolder thesis: this isn't an AI bubble, it's an AI War. Online Learning will become the third paradigm-shifting breakthrough.
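
For readers curious what the "progressive disclosure" idea from the MCP vs. Agent Skills highlight above might look like in practice, here is a minimal Python sketch. It is an illustration under assumptions, not the article's or any vendor's implementation: the `Skill` class, the `build_context` function, and the choice of what sits in each of the three layers (short metadata, full instructions, extra resources) are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Skill:
    # Layer 1 (assumed): short metadata that always stays in context.
    name: str
    description: str
    # Layer 2 (assumed): full instructions, loaded only when the skill is selected.
    instructions: str
    # Layer 3 (assumed): extra resources, loaded individually and only on request.
    resources: dict[str, str] = field(default_factory=dict)


def build_context(skills, active=None, wanted_resources=()):
    """Assemble prompt context, adding deeper layers only when needed."""
    # Every skill contributes its one-line summary (layer 1).
    parts = [f"{s.name}: {s.description}" for s in skills]
    for s in skills:
        if s.name != active:
            continue
        # Only the active skill contributes its full instructions (layer 2).
        parts.append(s.instructions)
        # Only explicitly requested resources are added (layer 3).
        for key in wanted_resources:
            if key in s.resources:
                parts.append(s.resources[key])
    return "\n".join(parts)


# Hypothetical skills used purely for illustration.
skills = [
    Skill("pdf-report", "Build PDF reports.",
          "Step 1: gather data. Step 2: render pages.",
          {"template": "TEMPLATE BODY"}),
    Skill("sql-review", "Review SQL queries.",
          "Checklist: indexes, joins, limits."),
]

idle = build_context(skills)
working = build_context(skills, active="pdf-report", wanted_resources=["template"])
print(len(idle), len(working))
```

The idle context carries only one line per skill, so adding more skills grows the prompt slowly; the longer instructions and resources enter the context only for the skill that is actually in use.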

In 2025, AI evolved from tool to partner—and we're still learning how to work alongside it. Thank you for being with us this year. Stay curious, and see you in 2026!

![[Introduction to Generative AI & Machine Learning 2025] Lecture 10: History of Speech Language Model Development (Historical Review; 2025 Technology from 1:42:00)](https://image.jido.dev/20251223230942_hqdefault.jpg)

![[Morning Brief] Who Is Your AI Workplace Partner? This Data Has the Answer](https://image.jido.dev/20251226042700_f7a6ffb8)