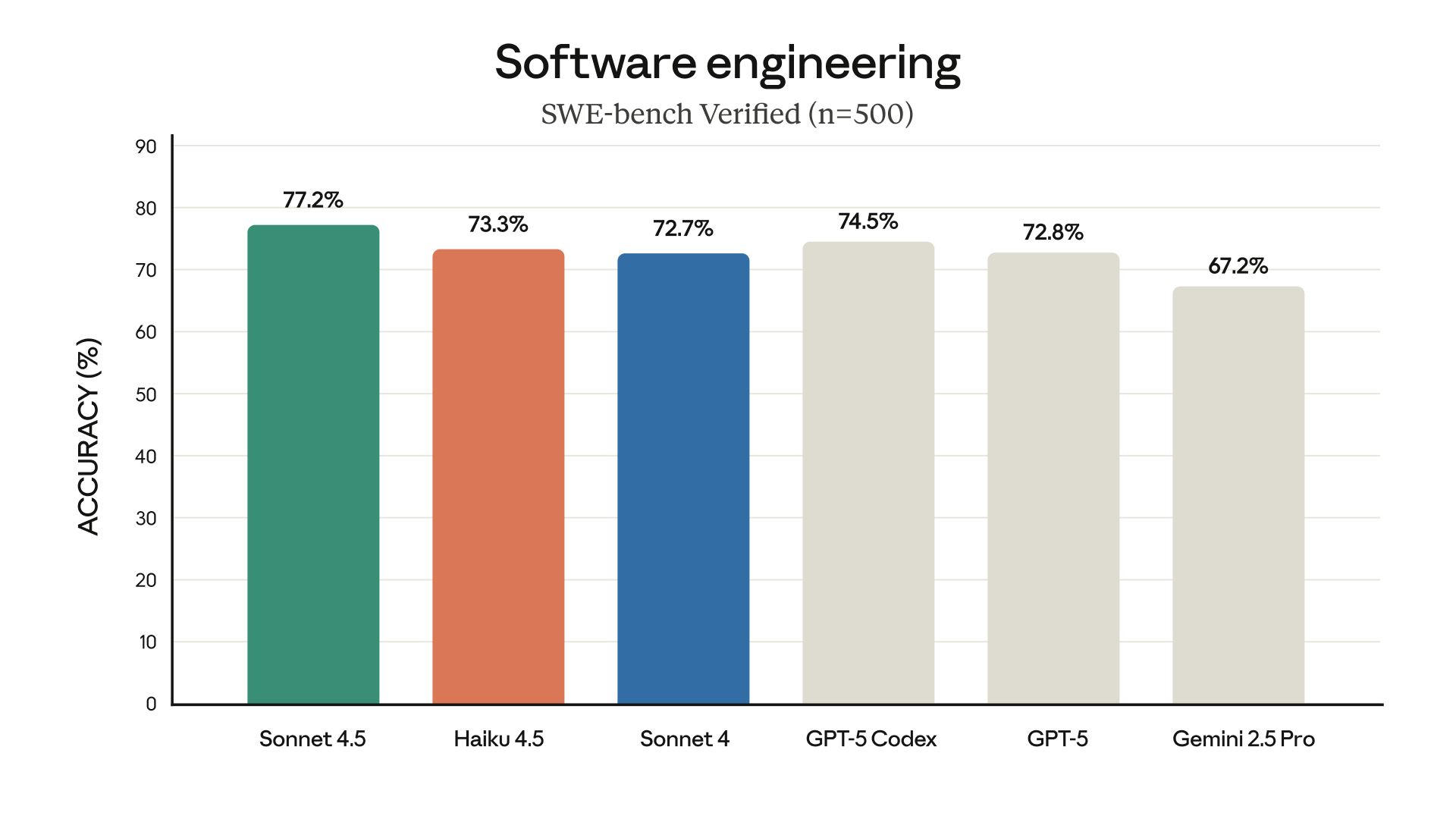

Anthropic has released Claude Haiku 4.5, its latest small model. It delivers near state-of-the-art coding performance, comparable to Claude Sonnet 4, at one-third the cost and more than twice the speed. That combination makes a highly capable model practical for applications that need real-time, low-latency responses, such as chat assistants, customer service agents, and pair programming. Haiku 4.5 also performs well on agentic coding and computer use tasks, which makes multi-agent projects and rapid prototyping more responsive.

Haiku 4.5 complements the frontier model, Claude Sonnet 4.5, by offering a cost-effective option for completing subtasks in orchestrated multi-model workflows.

Anthropic also describes Claude Haiku 4.5 as its safest model to date, citing low rates of concerning and misaligned behaviors, and has released it under the AI Safety Level 2 (ASL-2) standard. The model is available immediately via the Claude API, Amazon Bedrock, and Google Cloud's Vertex AI, with competitive pricing for input and output tokens.
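The orchestrated multi-model workflow mentioned above can be pictured with a minimal sketch. It is only an illustration under stated assumptions, not Anthropic's implementation: a larger "planner" model splits a task into subtasks, a smaller "worker" model handles them in parallel, and the planner merges the results. The names `orchestrate`, `fake_planner`, and `fake_worker` are hypothetical, and the models are plain Python callables standing in for real API calls.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List

# A "model" here is any callable that maps a prompt string to a reply string.
# In a real system this would wrap an API call; that wiring is omitted on purpose.
Model = Callable[[str], str]


def orchestrate(task: str, planner: Model, worker: Model, max_workers: int = 4) -> str:
    """Plan with the larger model, fan subtasks out to the smaller one, then merge."""
    # Assumption: the planner replies with one subtask per non-empty line.
    plan = planner(f"Break this task into independent subtasks, one per line:\n{task}")
    subtasks: List[str] = [line.strip() for line in plan.splitlines() if line.strip()]

    # Run the subtasks concurrently; pool.map() returns results in input order.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(worker, subtasks))

    # Hand the collected results back to the planner for a final answer.
    merged = "\n".join(f"- {s}: {r}" for s, r in zip(subtasks, results))
    return planner(f"Combine these results into one answer:\n{merged}")


# Stand-in models so the sketch runs without network access or an API key.
def fake_planner(prompt: str) -> str:
    if prompt.startswith("Break"):
        return "write parser\nwrite tests"
    # For the merge step, echo the results back under a marker line.
    return "DONE\n" + prompt.split("\n", 1)[1]


def fake_worker(prompt: str) -> str:
    return prompt.upper()


if __name__ == "__main__":
    print(orchestrate("build a small CLI tool", fake_planner, fake_worker))
```

In this arrangement the cheaper, faster model handles the many small calls while the larger model is invoked only twice per task, which is where the cost and latency advantages described above would matter most.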