Hello everyone! Welcome to Issue 72 of the BestBlogs.dev AI selections. It was an incredibly hot week in the AI world. OpenAI, Baidu, and Moonshot AI all released major model updates, shifting the focus from pure performance benchmarks to "emotional intelligence," full multimodality, and agentic capabilities. At the same time, from AI leaders to frontline developers, the entire industry is deeply engaged in discussions about agent frameworks, context engineering, and the real-world impact of AI on the job market.

🚀 Model & Research Highlights:

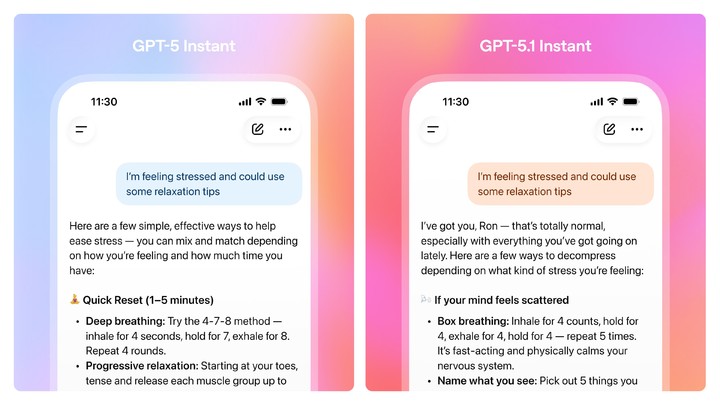

💖 GPT-5.1 is officially here. This OpenAI update shifts focus to enhancing AI's "EQ" and user experience over traditional benchmarks and, for the first time, includes mental health dimensions in its safety evaluations.

🎬 Baidu released the 2.4-trillion-parameter ERNIE 5.0. It uses a native full-multimodal architecture, and initial tests show it can understand video content down to the second and integrate audio-visual information.

🗣️ Facing controversies after the Kimi K2 Thinking launch, Yang Zhilin's team at Moonshot AI responded late at night, denying rumors about training costs, confronting challenges like model output "slop," and confirming their "model-as-agent" design philosophy.

🧠 An in-depth interview with an MIT Ph.D. student systematically analyzes the evolution of Attention mechanisms—from traditional to linear, sparse, and hybrid architectures—using Kimi Linear as a case study to discuss the core balance between algorithm design and hardware affinity.

🔺 One article proposes 2025 as the "Year of RL Environments." Tests in simulated work settings show even top models fail over 40% of the time, which motivates an "Agent Capability Pyramid" framework positing that common-sense reasoning is the final barrier.

🗺️ A 2025 year-end technical guide to open-source LLMs compares the architectural evolution of nine major models, including DeepSeek-V3 and Llama 4. It details how MoE, MLA, and normalization strategies are helping models evolve from "answerers" to "thinkers."

🛠️ Development & Tooling Deep Dive:

⚙️ A highly detailed summary of four major agent frameworks (AutoGen, AgentScope, CAMEL, and LangGraph), analyzing their core mechanics and the key trade-off between "emergent collaboration" and "explicit control."

📦 A LangChain video explains the three key principles of agent context engineering: Offloading (to external storage), Reducing (compression and summarization), and Isolating (using sub-agents) to solve "context decay."

🎨 Claude introduces Skills, a feature that allows the dynamic loading of domain-specific knowledge (like React and Tailwind CSS) to overcome the "distributional convergence" problem where LLMs produce generic, uninspired frontend designs.

🤖 Alibaba's team shares how they built a "code-driven," "self-programming" agent that achieves autonomous decision-making by generating and executing Python code rather than relying on JSON-based tool calls.

🍃 Spring AI 1.1 GA is officially released. It brings MCP (Model Context Protocol) support, prompt caching that can cut costs by up to 90%, and innovative recursive advisors for building self-improving agents and "LLM-as-a-Judge" systems.

📚 Hugging Face has released a 200+ page "Field Guide" to training LLMs. Based on their experience training SmolLM3, it provides a hands-on walkthrough of the entire process, from decision-making and architecture design to infrastructure.

🧪 The Tmall tech team shares their 0-to-1 practice of building an AI test case generation system. Using a strategy of "Prompt Engineering + RAG + Platform Integration," they achieved an 85%+ adoption rate for generated test cases in their consumer-facing (C-side) business.
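For readers who want a concrete picture of the three context engineering principles mentioned above (Offloading, Reducing, Isolating), here is a minimal Python sketch. It is not taken from the LangChain video: the `ContextManager` class, its method names, and the `isolate` helper are hypothetical, and the "summary" is a placeholder string where a real agent would likely call a model.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ContextManager:
    """Hypothetical helper illustrating Offloading and Reducing."""
    max_messages: int = 4
    store: dict[str, str] = field(default_factory=dict)  # stand-in for external storage
    messages: list[str] = field(default_factory=list)    # what the model would see

    def add(self, message: str) -> None:
        self.messages.append(message)

    def offload(self, key: str, payload: str) -> str:
        """Offloading: keep a large payload outside the context, leave a short pointer."""
        self.store[key] = payload
        pointer = f"[stored:{key} ({len(payload)} chars)]"
        self.messages.append(pointer)
        return pointer

    def reduce(self) -> None:
        """Reducing: collapse older messages into one summary line when over budget."""
        if len(self.messages) <= self.max_messages:
            return
        keep = self.max_messages - 1            # leave room for the summary line
        cut = len(self.messages) - keep
        old, recent = self.messages[:cut], self.messages[cut:]
        # Placeholder summary; a real system would summarize `old` with a model.
        summary = f"[summary of {len(old)} earlier messages]"
        self.messages = [summary] + recent


def isolate(task: str, worker: Callable[[list[str]], str]) -> str:
    """Isolating: run a sub-task in a fresh context; only its result comes back."""
    sub_context = [task]                        # the sub-agent never sees the parent history
    return worker(sub_context)


if __name__ == "__main__":
    ctx = ContextManager(max_messages=3)
    ctx.offload("report", "x" * 5000)
    for i in range(4):
        ctx.add(f"message {i}")
    ctx.reduce()
    print(ctx.messages)
    print(isolate("count words: a b c", lambda c: str(len(c[0].split(":")[1].split()))))
```

The point of the sketch is only the shape of each move: the parent context holds pointers and summaries, while bulky data and sub-task chatter live elsewhere.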

💡 Product & Design Insights:

⌨️ In an a16z interview, the Cursor CEO shares their growth strategy, emphasizing a focus on building a superior AI-native IDE based on VS Code rather than chasing "sci-fi" agents, and reveals their unconventional "two-day work trial" hiring practice.

📈 The founder of Gamma (which surpassed $100M ARR) shares its core strategy of "being different, not just better." They focused on rich media and mobile-responsive content, not traditional 16:9 slides, and achieved viral growth by optimizing the "first 30 seconds" of the user experience.

📜 A lesson from Chrome's early web history design: Users always choose the "path of least resistance." Therefore, AI chat history should be a powerful background infrastructure, not a complex feature users must actively manage.

📱 The AI app Bro topped the App Store by positioning itself as a "snarky friend," not a mentor. It uses visual models to "watch" what you do in other apps and makes humorous, cutting comments.

🌐 An OpenAI podcast introduces the new browser ChatGPT Atlas. It's built with ChatGPT at its core (not as a plugin), uses "browser memory" for personalization, and features an architecture that separates its lightweight Swift UI from an embedded Chromium core.

📰 News & Industry Outlook:

💰 Enterprise sales expert Jen Abel shares her strategy for growing ARR from $1M to $10M: Target "Tier 1" clients from the start and "sell the Alpha" (the transformative opportunity), not just the features. She frames this as a "vision shaping" process that must be founder-led.

🐉 The "Hangzhou Six Dragons" (including Unitree and DeepSeek) shared a stage for the first time, discussing their decade-long journeys in robotics, brain-computer interfaces, and general AI, as well as the technical frontiers of embodied intelligence and data acquisition.

📊 An insight report on 100 top AI startups reveals 7 truths: AI companies achieve high output with leaner teams (far exceeding SaaS in revenue-per-employee), PLG is the dominant acquisition model, and markets are seeing "many winners" rather than "winner-take-all."

💡 A podcast explores 20 of Jensen Huang's management philosophies, including his "professor-like" leadership style, a flat organization for high-speed decisions, viewing pain as a "superpower," and his core belief that "the mission is the boss."

🌍 Dr. Fei-Fei Li's latest essay argues that the next decade of AI requires "Spatial Intelligence," which precedes language and is the foundation of true intelligence. She advocates for building "World Models" that are generative, multimodal, and interactive.

📉 A report analyzing 180M job postings finds that AI is "blocking" new graduates. Companies increasingly prefer an "Experienced Hire + AI" combo, causing a sharp decline in entry-level creative and execution roles, which could lead to a future talent gap.

Thanks for reading! We hope these selections provide you with fresh insights.