Hey there! Welcome to BestBlogs.dev Issue #74.

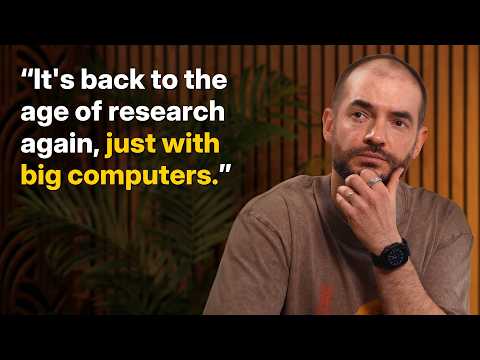

This week, Ilya Sutskever sat down with Dwarkesh Patel for a fascinating conversation, declaring that AI is shifting from the scaling era into the research era. While everyone's asking how to throw more compute at the problem, Ilya offers a counterintuitive answer: the bottleneck isn't GPUs anymore; it's ideas. He points to generalization as the fundamental weakness of current models. AI systems that ace competitive benchmarks still get stuck in loops on simple tasks. It raises an old question: do we need bigger scale, or deeper understanding?

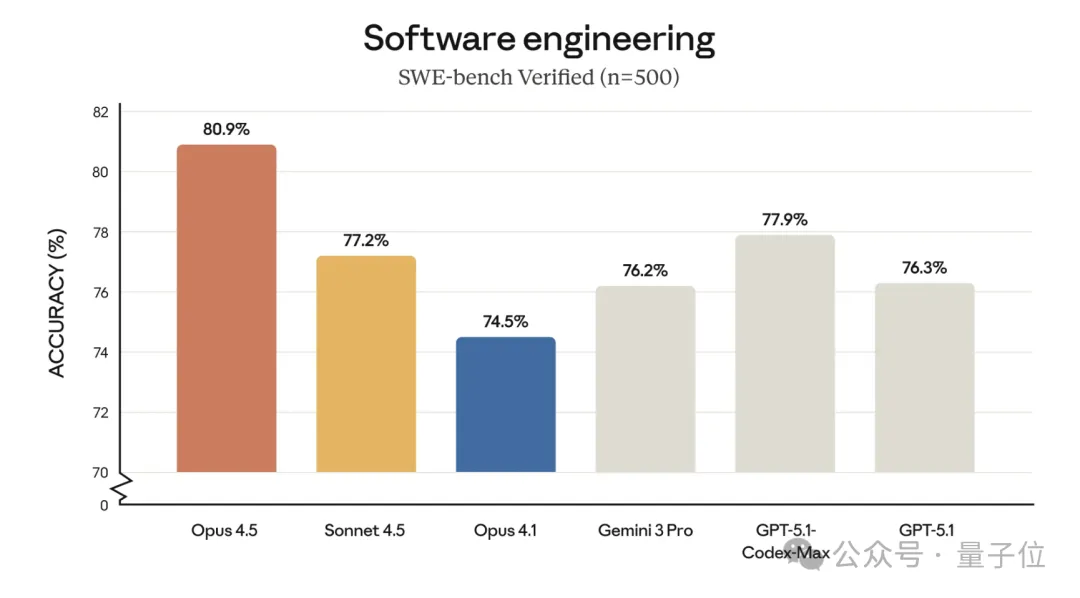

Anthropic also dropped Claude Opus 4.5 this week, outperforming humans on internal engineering hiring tests with significant improvements in agentic capabilities and visual reasoning. I took the opportunity to revisit BestBlogs.dev's design and architecture using Opus 4.5, converting the site to static pages and stripping away unnecessary interactive elements. The goal: focus on reading, minimize distractions.

Here are 10 highlights worth your attention this week:

🔬 Ilya Sutskever describes the puzzling "jaggedness" of current models—they can write papers and solve math problems, yet repeat the same sentence twice. He attributes this to RL over-optimizing for evaluation metrics, arguing that generalization is the real bottleneck on the path to superintelligence.

🤖 Claude Opus 4.5 launches with superhuman performance on engineering tests. It features an "effort" parameter that lets users dial compute allocation based on task complexity. A deep dive into Claude Agent Skills reveals how the system uses prompt extensions rather than traditional code to enhance AI capabilities—a meta-tool architecture worth understanding.

🎨 Two notable releases in image generation. FLUX.2 ships with a completely rebuilt architecture, and the Diffusers team offers 4-bit quantization and other optimizations for consumer GPUs. Google's Nano Banana Pro excels at multilingual text rendering with search-augmented generation—think menus with real-time prices—plus one-click high-quality PPT generation.

📁 LangChain proposes using filesystems for agent context management: offload tool outputs to temporary storage, then use grep and glob for precise retrieval. This cuts token consumption while improving reliability on complex tasks. Atlassian's AI lead argues that taste, knowledge, and workflow are the keys to fighting "AI slop."
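To make the filesystem idea concrete, here's a minimal sketch of the pattern in plain Python. It is not LangChain's API: the `offload` and `grep` helpers, the scratch directory, and the file naming are all hypothetical, and the "tool output" is a fabricated log used only for illustration. The point is that the full output lands on disk, the prompt only carries a short stub, and the agent pulls back just the lines it needs.

```python
import re
import tempfile
from pathlib import Path

# Hypothetical scratch directory for a single agent run (an assumption).
WORKDIR = Path(tempfile.mkdtemp(prefix="agent_ctx_"))


def offload(tool_name: str, output: str, preview_chars: int = 80) -> str:
    """Write a tool output to disk and return a short stub for the prompt."""
    index = len(list(WORKDIR.glob(f"{tool_name}_*.txt")))
    path = WORKDIR / f"{tool_name}_{index}.txt"
    path.write_text(output, encoding="utf-8")
    preview = output[:preview_chars].replace("\n", " ")
    return f"[saved {len(output)} chars to {path.name}] {preview}"


def grep(pattern: str, file_glob: str = "*.txt") -> list[tuple[str, int, str]]:
    """Return (file name, line number, line) for each matching line."""
    regex = re.compile(pattern)
    hits = []
    for path in sorted(WORKDIR.glob(file_glob)):
        lines = path.read_text(encoding="utf-8").splitlines()
        for lineno, line in enumerate(lines, start=1):
            if regex.search(line):
                hits.append((path.name, lineno, line))
    return hits


# A made-up, oversized tool output: 500 routine lines and one error.
log = "\n".join(f"INFO step {i} ok" for i in range(500))
log += "\nERROR disk full on node-7"

stub = offload("run_tests", log)                  # goes into the prompt
matches = grep(r"ERROR", "run_tests_*.txt")       # targeted retrieval later
```

Here the stub stays around a hundred characters regardless of how large the log grows, and the glob pattern narrows the search to one tool's files before the regex runs.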

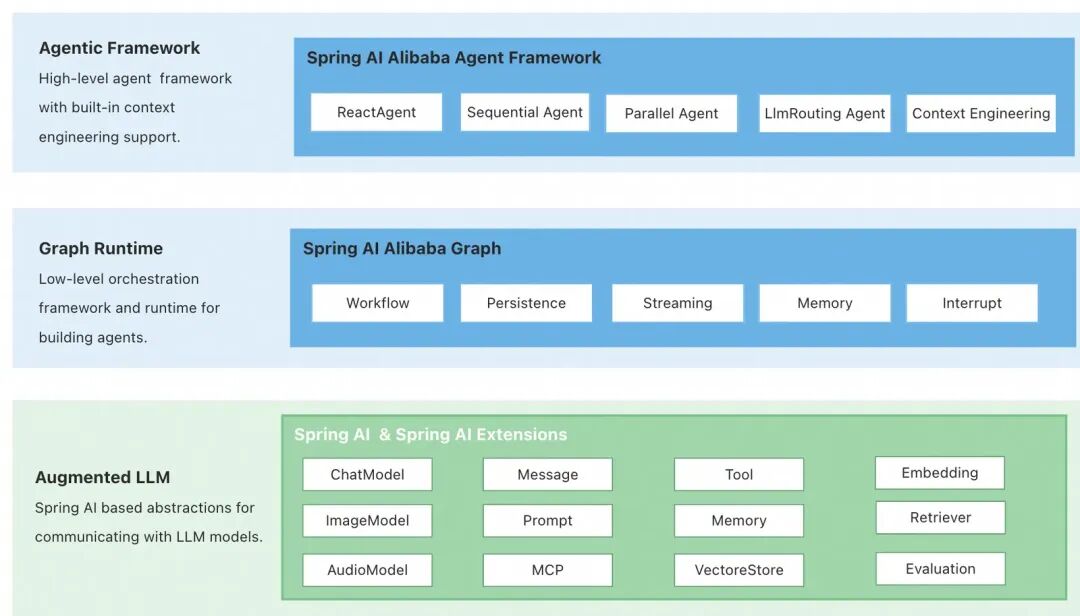

☕ Spring AI Alibaba 1.1 brings the Java ecosystem into the Agentic AI era. The release introduces ReAct-based agents and Graph workflow orchestration, with standardized Hooks and Interceptors for message compression and human-in-the-loop intervention—enterprise-grade AI tooling out of the box.

📊 Jellyfish analyzed 20 million PRs and found that full AI coding tool adoption doubles PR throughput and cuts cycle time by 24%. But architecture matters: centralized codebases see up to 4x gains, while distributed systems barely benefit due to context fragmentation. Another data point: autonomous agents currently contribute less than 2% of merged code.

📈 Lovable's growth lead Elena Verna argues that AI-native companies face a rewritten growth playbook: PMF is now a weekly validation target, traditional SEO and paid channels are broken, and shipping daily is table stakes. Her core thesis: brand equals product experience, and retention—not acquisition—is the only metric that matters.

🏆 Google stages a comeback with Gemini 3, using sparse MoE architecture and TPU co-design to slash inference costs to one-tenth of competitors' costs. The LLM landscape has officially become a three-way race between Google, OpenAI, and Anthropic. Meanwhile, Generative UI hints at a future where AI creates interfaces, not just content.

👨‍💼 Engineering leadership faces new challenges in the AI era. The Jevons paradox means AI won't replace engineers; it'll create more demand. But the automation paradox means the work gets harder. Leaders should watch out for AI disrupting junior talent pipelines: when newcomers can complete basic tasks with AI, how do they build foundational understanding?

🧩 A sharp analysis offers a strategic lens: Grammarly's evolution from grammar checker to comprehensive Agent platform, and the Bundle theory—irreplaceability determines pricing, not usage. AI will make capabilities flow like shipping containers, and careers may shift toward Hollywood-style project work.

Hope this issue sparks some new ideas. Stay curious, and see you next week!
