Hey there! Welcome to BestBlogs.dev Issue #76.

The most thought-provoking idea I encountered this week came from Anthropic's AI Engineer conference talk: stop building agents, start building skills. Their core argument? Today's agents have intelligence but lack domain expertise. It's like asking a brilliant mathematician to do your taxes: smart, yes; experienced, no. Skills are essentially folders that package procedural knowledge, and they can be version-controlled, shared across teams, and integrated seamlessly with MCP. Coincidentally, MCP officially moved under Linux Foundation governance this week, having racked up 37,000 stars in just 8 months, which is clear validation of the industry's hunger for standardization. I wonder: as the "model + runtime + skill library + MCP" architecture takes shape, could Skills become a universal capability carrier across models and products, much like Docker images? This might be the pivotal moment when AI development shifts from reinventing the wheel to assembling standardized components.
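To make "skills are folders" a bit more concrete, here is a minimal Python sketch of discovering skills on disk. It assumes each skill lives in its own directory with a `SKILL.md` file whose front matter carries a `name` and a `description`, the layout Anthropic describes for its Agent Skills. The parser only understands simple `key: value` lines rather than full YAML, and `SkillMeta` and `discover_skills` are hypothetical names, not part of any official SDK.

```python
"""Minimal sketch: discovering skills packaged as folders.

Assumes each skill is a directory containing SKILL.md, whose front matter
(between '---' lines) holds simple 'key: value' pairs such as name and
description. Simplified parser, not full YAML and not an official loader.
"""
import tempfile
from dataclasses import dataclass
from pathlib import Path


@dataclass
class SkillMeta:
    name: str
    description: str
    path: Path


def parse_front_matter(text: str) -> dict[str, str]:
    """Return 'key: value' pairs from a leading '---' delimited block."""
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}
    meta: dict[str, str] = {}
    for line in lines[1:]:
        if line.strip() == "---":  # end of front matter
            break
        key, sep, value = line.partition(":")  # split on the first colon only
        if sep:
            meta[key.strip()] = value.strip()
    return meta


def discover_skills(root: Path) -> list[SkillMeta]:
    """Load only the lightweight metadata of every skill folder under root."""
    skills: list[SkillMeta] = []
    for skill_file in sorted(root.glob("*/SKILL.md")):
        meta = parse_front_matter(skill_file.read_text(encoding="utf-8"))
        if "name" in meta and "description" in meta:
            skills.append(SkillMeta(meta["name"], meta["description"], skill_file.parent))
    return skills


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        skill_dir = Path(tmp) / "tax-filing"  # hypothetical example skill
        skill_dir.mkdir()
        (skill_dir / "SKILL.md").write_text(
            "---\nname: tax-filing\ndescription: Prepare a personal tax return\n---\n"
            "# Steps\n1. Collect income statements\n",
            encoding="utf-8",
        )
        for skill in discover_skills(Path(tmp)):
            print(f"{skill.name}: {skill.description}")
```

Reading only the short metadata up front, and opening the rest of a skill's folder when a task actually needs it, is what lets an agent carry a large skill library without stuffing everything into its context.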

Here are 10 highlights worth your attention this week:

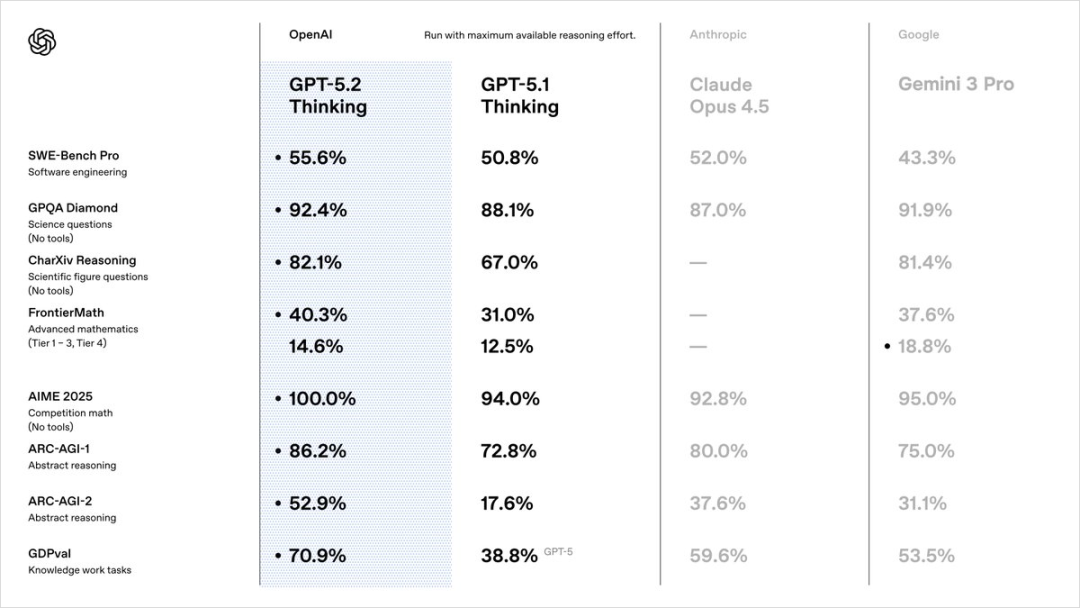

🤖 GPT-5.2 dropped, and OpenAI is positioning it as "AI for the working professional." It's no longer just for programmers: it also targets lawyers, designers, and marketing managers. In evaluations of real-world professional tasks spanning 44 occupations, over 70% of outputs matched or exceeded the quality of work by experts averaging 14 years of experience. Pragmatic iteration, real-world focus.

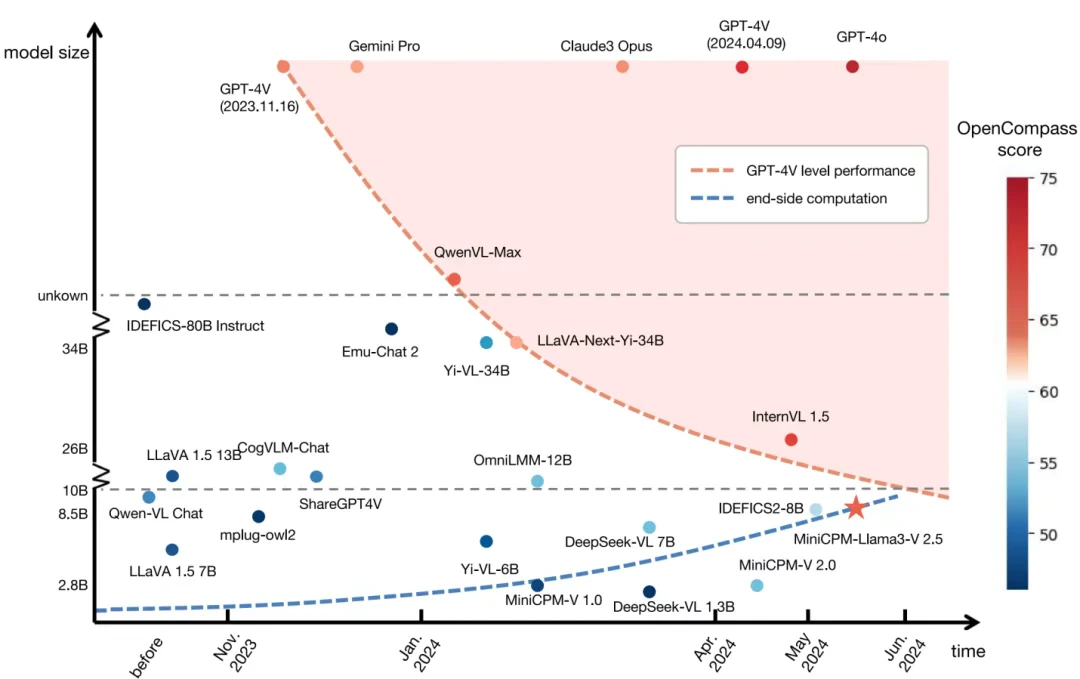

🔬 Tsinghua's density law research, published in Nature Machine Intelligence, reveals AI's own "Moore's Law": the capability density of large models (capability per parameter) doubles roughly every 3.5 months. This explains how edge models are catching up to cloud giants. The research also predicts smartphones running self-learning personal LLMs by 2027.
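A fixed doubling period compounds quickly. The back-of-the-envelope Python below assumes density grows as a clean exponential, 2^(t / 3.5) with t in months, which is a simplification of the real trend; the function names and the 70B-parameter example are hypothetical and only illustrate the arithmetic.

```python
"""Back-of-the-envelope model of a fixed 3.5-month doubling period.

Assumes capability density grows as 2 ** (t / 3.5), t in months.
Real progress is noisier than a clean exponential.
"""
import math

DOUBLING_MONTHS = 3.5


def density_multiplier(months: float, doubling: float = DOUBLING_MONTHS) -> float:
    """Factor by which capability density grows after `months` months."""
    return 2 ** (months / doubling)


def params_needed(params_today: float, months: float) -> float:
    """Parameters needed later to match today's capability, if density alone improves."""
    return params_today / density_multiplier(months)


def months_to_shrink(factor: float, doubling: float = DOUBLING_MONTHS) -> float:
    """Months until the same capability fits in `factor` times fewer parameters."""
    return doubling * math.log2(factor)


if __name__ == "__main__":
    print(f"12-month multiplier: {density_multiplier(12):.2f}x")  # about 10.77x
    # Hypothetical 70B-parameter model as the starting point.
    print(f"70B-equivalent after 12 months: {params_needed(70e9, 12) / 1e9:.1f}B")
    print(f"Months for a 100x reduction: {months_to_shrink(100):.1f}")
```

Under this idealized model, one year of progress is worth roughly a 10x reduction in the parameters needed for the same capability, which is the intuition behind edge models closing the gap.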

📊 Stanford's study of 120,000 developers found a 0.40 correlation between codebase health and AI ROI. Clean code amplifies AI's benefits; technical debt accelerates entropy. The kicker: a 14% increase in PRs can mask a 9% drop in code quality and 2.5x more rework, potentially making overall ROI negative.
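Why can more PRs still mean negative ROI? The toy model below is not from the study; it is a hypothetical framing that assumes each PR is one unit of output, baseline rework consumes a fraction `baseline_rework` of that, and "2.5x more rework" multiplies total rework volume. It ignores the 9% quality drop entirely, so it is, if anything, optimistic.

```python
"""Toy model: when does a throughput lift stop paying off?

Hypothetical assumptions (not from the study): baseline PR volume is 1.0,
rework volume is `baseline_rework` of that, AI lifts PR volume by
`pr_lift` and multiplies rework volume by `rework_multiplier`.
"""


def net_output(pr_volume: float, rework_volume: float) -> float:
    """Useful output: shipped work minus effort spent redoing it."""
    return pr_volume - rework_volume


def ai_net_gain(baseline_rework: float, pr_lift: float = 0.14,
                rework_multiplier: float = 2.5) -> float:
    """Net output with AI minus net output without it (baseline volume = 1)."""
    before = net_output(1.0, baseline_rework)
    after = net_output(1.0 + pr_lift, baseline_rework * rework_multiplier)
    return after - before  # equals pr_lift - (rework_multiplier - 1) * baseline_rework


def break_even_rework(pr_lift: float = 0.14, rework_multiplier: float = 2.5) -> float:
    """Baseline rework share at which the AI gain drops to zero."""
    # Solve pr_lift - (rework_multiplier - 1) * r = 0 for r.
    return pr_lift / (rework_multiplier - 1)


if __name__ == "__main__":
    print(f"Break-even baseline rework: {break_even_rework():.1%}")
    for r in (0.05, 0.10, 0.20):
        print(f"baseline rework {r:.0%}: net gain {ai_net_gain(r):+.3f}")
```

Under these assumptions, once rework already takes more than about 9.3% of output, the 14% throughput lift is fully consumed by the extra rework, which is one way a healthier codebase (lower baseline rework) ends up amplifying AI's benefits.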

💡 Manus founder Zhang Tao finally addressed outside skepticism and laid out the company's core philosophy: "Less structure, more intelligence." Its Zero Predefined Workflow approach hands task decisions entirely to the model while keeping Manus at the top of benchmarks. His broader argument: application-layer teams can compete with OpenAI through model-selection flexibility alone.
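The difference between a predefined workflow and handing decisions to the model can be sketched in a few lines. This is an illustration of the general idea, not Manus's implementation: `choose_action` is a hypothetical stand-in for an LLM call that returns either a tool name or `"finish"`, and the tools are plain functions.

```python
"""Contrast: a hard-coded workflow vs. a loop where the model picks each step.

`choose_action` stands in for an LLM call (hypothetical). Tools are plain
functions keyed by name. Illustration only, not Manus's implementation.
"""
from typing import Callable

Tool = Callable[[str], str]
Chooser = Callable[[str, list[str]], str]


def fixed_workflow(task: str, tools: dict[str, Tool]) -> list[str]:
    """Predefined pipeline: the same steps in the same order, every time."""
    return [tools[name](task) for name in ("search", "summarize")]


def model_driven(task: str, tools: dict[str, Tool], choose_action: Chooser,
                 max_steps: int = 10) -> list[str]:
    """No predefined workflow: the model picks the next tool, or 'finish'."""
    history: list[str] = []
    for _ in range(max_steps):  # hard cap so a confused model cannot loop forever
        action = choose_action(task, history)
        if action == "finish":
            break
        history.append(tools[action](task))
    return history
```

The only structure left in `model_driven` is the loop and a step budget; which tools run, in what order, and when to stop are all decided by whatever model sits behind `choose_action`, so swapping in a stronger model changes behavior without rewriting the workflow.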

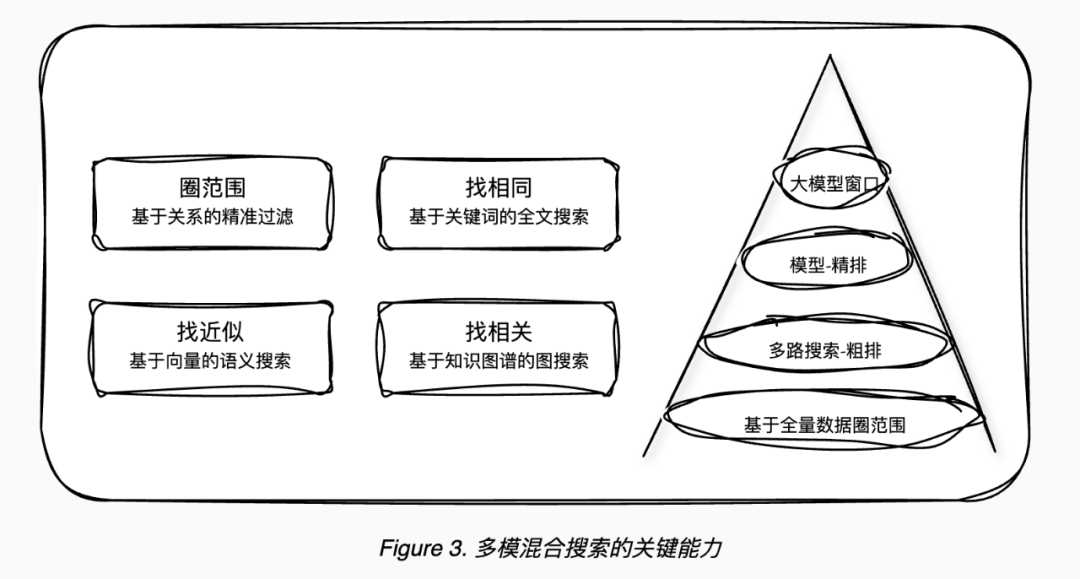

🌐 a16z's 2026 predictions argue that agent-native infrastructure will become essential, with the core challenge shifting from compute to multi-agent coordination. The bigger insight: 99% of the market opportunity lies in traditional verticals, not Silicon Valley tech circles. Enterprise software value is moving from systems of record to intelligent execution layers.

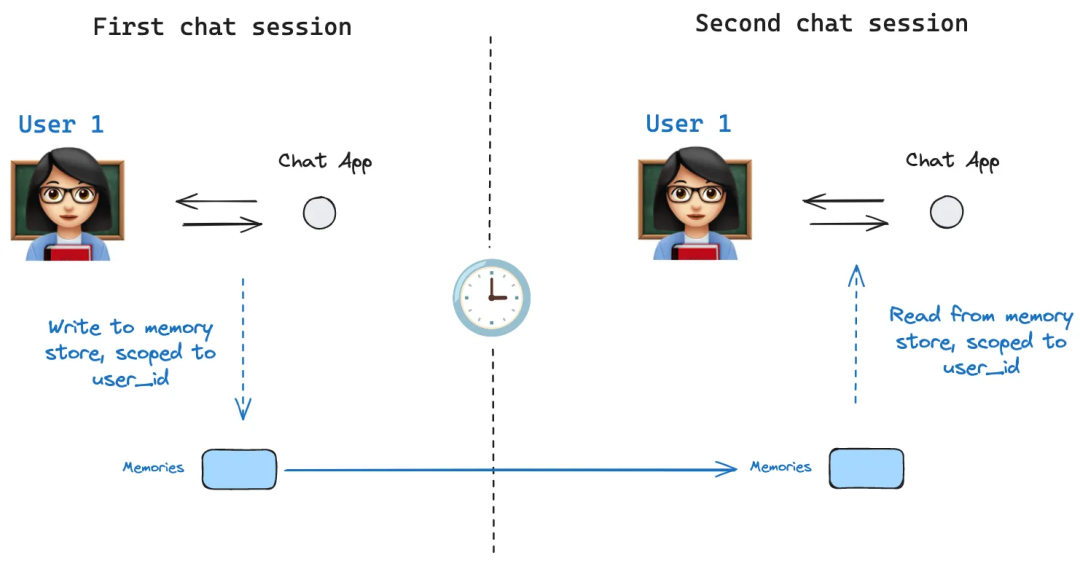

🛠️ Tencent's engineering team published a comprehensive guide to persistent memory for AI agents. Built on LangGraph, it uses a Checkpointer for short-term memory (state within a single session) and a Store for long-term memory (knowledge shared across sessions). The guide progresses from InMemorySaver to PostgreSQL persistence to semantic search, complete with working code examples.

🎨 Zhipu open-sourced the GLM-4.6V series, which builds native function calling directly into the vision model, enabling multimodal tool calling where "the image is the parameter and the result is the context." The 9B Flash version outperforms Qwen3-VL-8B, and API pricing has dropped by 50%.

📈 Dify founder Lu Yu unpacked two years of startup lessons behind the project's 110k+ GitHub stars: a commitment to engineering value and model neutrality, a pragmatic transition from high-code to intelligence, and unexpected success in Japan. It reflects a deep understanding of the "last mile" problem in AI applications.

🏆 OpenRouter and a16z's joint report, based on 100 trillion tokens of real usage data, reveals key shifts: Chinese open-source models' share of usage jumped from 1.2% to nearly 30%; reasoning-optimized models now account for over 50% of traffic; and coding makes up more than half of total usage. The "Cinderella slipper effect" is worth noting: a model's retention depends on perfectly solving a specific pain point at launch.

🧩 Investor Zhu Xiaohu's year-end AI industry review: no bubble in sight for at least three years. Competition has shifted from model capability to the battle for super-entry points. His advice for founders? "Diverge 15 degrees from consensus"—focus on vertical niches and grunt work that big tech won't touch.

Hope this issue sparks some new ideas. Stay curious, and see you next week!