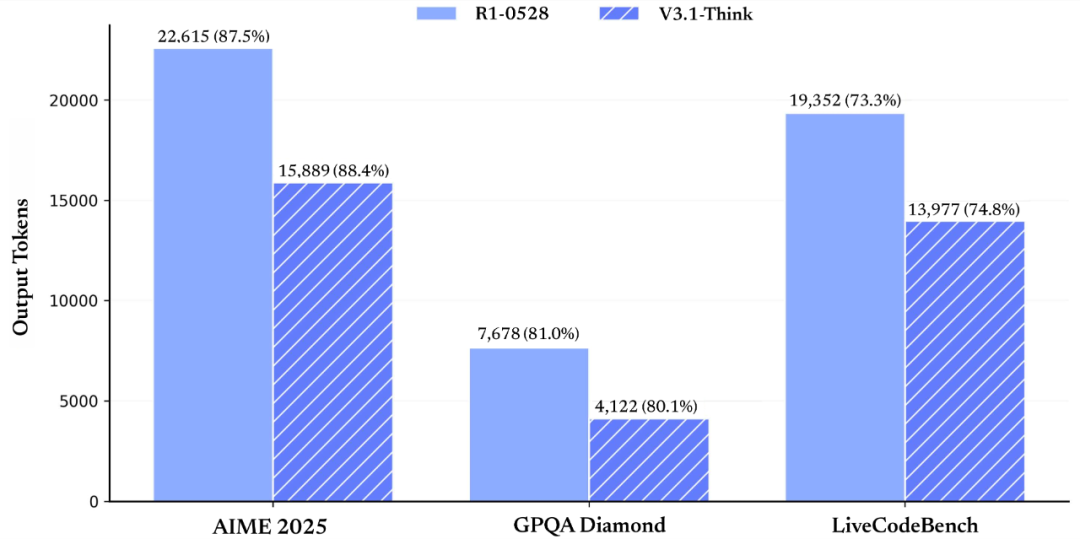

DeepSeek has officially released DeepSeek-V3.1. Its headline feature is a hybrid reasoning architecture: a single model supports both a "reasoning" (thinking) mode and a "non-reasoning" (non-thinking) mode and can switch freely between them. Post-training optimization significantly improves performance on coding-agent tasks (SWE-bench, Terminal-Bench) and search-agent tasks (BrowseComp, HLE). Reasoning is also more efficient: V3.1-Think produces 20%-50% fewer output tokens than DeepSeek-R1-0528 while delivering comparable performance, which shortens response times and can lower cost and resource usage.

The API has been upgraded at the same time. The context window is extended to 128K tokens, Function Calling now supports strict mode, and the Anthropic API format is supported. Both the base model and the post-trained model of DeepSeek-V3.1 have been open-sourced on Hugging Face and ModelScope. The article also notes that API pricing will change on September 6, 2025, which may affect users' long-term costs and usage strategies.
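In DeepSeek's OpenAI-compatible chat API, the two modes are exposed as separate model names, so switching modes amounts to changing one field in the request. The sketch below only builds request payloads and sends nothing. It assumes the model names `deepseek-chat` (non-reasoning) and `deepseek-reasoner` (reasoning), the base URL `https://api.deepseek.com`, and the beta base URL `https://api.deepseek.com/beta` for strict-mode Function Calling. These details match DeepSeek's API documentation around the V3.1 release as best we know, so verify them against the current docs. `build_chat_request` and the `get_weather` tool are hypothetical names used only for illustration.

```python
import json

# Assumption: endpoints and model names as documented by DeepSeek around the
# V3.1 release; verify against the current API docs before use.
BASE_URL = "https://api.deepseek.com"
BETA_BASE_URL = "https://api.deepseek.com/beta"  # strict-mode Function Calling is a beta feature
MODEL_BY_MODE = {
    True: "deepseek-reasoner",  # V3.1 reasoning ("thinking") mode
    False: "deepseek-chat",     # V3.1 non-reasoning mode
}


def build_chat_request(prompt, thinking, tools=None, strict=False):
    """Return (url, payload) for an OpenAI-style chat completion request.

    Hypothetical helper for illustration: it only builds the request
    and does not send it.
    """
    base = BETA_BASE_URL if strict else BASE_URL
    payload = {
        "model": MODEL_BY_MODE[thinking],
        "messages": [{"role": "user", "content": prompt}],
    }
    if tools:
        # Copy each function definition so the caller's tools are not mutated,
        # and mark every function as strict when strict mode is requested.
        prepared = []
        for tool in tools:
            fn = dict(tool["function"])
            if strict:
                fn["strict"] = True
            prepared.append({"type": "function", "function": fn})
        payload["tools"] = prepared
    return base + "/chat/completions", payload


# Hypothetical tool definition used only for this example.
weather_tool = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
            "additionalProperties": False,
        },
    },
}

if __name__ == "__main__":
    # Same prompt, two modes: only the "model" field differs.
    for thinking in (True, False):
        url, body = build_chat_request("Summarize DeepSeek-V3.1.", thinking)
        print(url, body["model"])

    # Non-reasoning mode with a strict-mode function definition.
    url, body = build_chat_request(
        "What's the weather in Hangzhou?",
        thinking=False,
        tools=[weather_tool],
        strict=True,
    )
    print(url)
    print(json.dumps(body["tools"][0]["function"], indent=2))
```

Keeping request construction separate from sending makes it easy to inspect exactly what changes between modes; the resulting payload can then be posted with any HTTP client or passed to an OpenAI-compatible SDK.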